Accelerating Threat Detection Through Engineering

Organizations face numerous threats that can jeopardize cloud infrastructure, user endpoints, sensitive data, and day-to-day operations. To combat these risks effectively, security teams must stay ahead of malicious actors by continuously refining and deploying threat detections. One crucial part of this process is keeping threat detections under source control, which supports integrity, transparency, and collaboration across security operations teams.

Previously, we relied heavily on our enterprise suite of security technologies and manually incorporated newly discovered techniques. However, with new techniques being discovered continuously and the MITRE ATT&CK framework expanding, it became evident that our threat detection strategy needed a more systematic and automated approach. That’s when we shifted our focus to developing a CI/CD-based framework for threat detection development and integrating it into our day-to-day operations.

Source control, also known as version control, is a system that tracks changes to files and enables collaboration across multiple contributors. While it is often associated with software development, its value extends beyond code repositories, and it plays a vital role in security operations.

Achieving Source Control for Threat Detection

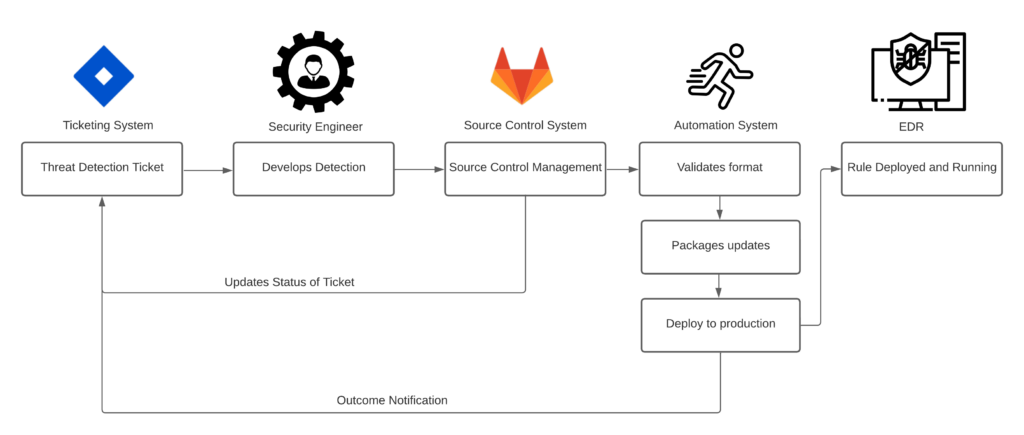

Implementing source control for threat detection requires a defined high-level flow and time spent building the integrations that keep our technologies in sync. The process has several key steps that lead to a working source-controlled detection pipeline.

It all starts with a Source Control System (SCS). Choose a suitable SCS, such as Bitbucket or GitLab, based on what is already in place at your organization, and weigh additional factors such as scalability, distributed workflows, and integration capabilities with existing security tools. Once that choice is final, stand up a dedicated repository to house your threat detections. Other security development initiatives can grow out of this, such as forensic automation scripts, cloud policies, or configuration files for your SIEM. Once the technologies are in place, the focus moves to the process and workflow, so that you and your team follow the standard everyone agreed on.

A strategic naming convention for branch names goes a long way toward standardizing your threat detections and reducing ambiguity about what a specific detection is. Some detections are a single signal, while others describe a higher-fidelity chain of events that warrants a higher severity. Having standards for branch creation, merging, and deployment helps minimize errors and maintain the established process, which should be documented in a standard operating procedure (SOP). This also establishes a baseline for tracking changes, so you can see who changed a particular detection rule, and team members can collaborate to review, comment, and suggest improvements that deliver better results or improve search performance.

No detection can be merged or deployed without proper approvals. This is where we employed a multi-person approval process, which gives senior engineers the final say on whether a detection is good or not. It ensures that proper language is used, reduces the likelihood of redundant rules that duplicate what others already capture, and confirms that two or more senior personnel have reviewed the rule. Once that heavy legwork is done, the next step is automating the deployment process to eliminate manual entry and reduce human error.
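As an illustration of how a branch naming standard and an approval rule can be checked automatically, here is a minimal sketch. The convention itself (`<kind>/<severity>/<ATT&CK technique ID>-<slug>`), the function names, and the threshold of two senior approvers are assumptions made for the example, not a description of our actual convention.

```python
import re
from typing import Optional

# Hypothetical convention: <kind>/<severity>/<ATT&CK technique ID>-<short-slug>
# "signal" is a single indicator, "chain" is a higher-fidelity chain of events.
BRANCH_PATTERN = re.compile(
    r"^(?P<kind>signal|chain)/"
    r"(?P<severity>low|medium|high|critical)/"
    r"(?P<technique>T\d{4}(?:\.\d{3})?)-"
    r"(?P<slug>[a-z0-9]+(?:-[a-z0-9]+)*)$"
)


def parse_branch_name(name: str) -> Optional[dict]:
    """Return the parts of a branch name, or None if it breaks the convention."""
    match = BRANCH_PATTERN.match(name)
    return match.groupdict() if match else None


def has_enough_approvals(approvers: set, senior_engineers: set, required: int = 2) -> bool:
    """True when at least `required` of the approvers are senior engineers."""
    return len(approvers & senior_engineers) >= required
```

A check like `parse_branch_name` could run as an early pipeline step and reject branches that do not match, while `has_enough_approvals` mirrors the kind of rule that most SCS platforms let you enforce through merge settings.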

Most SCS platforms have built-in integrations for CI/CD workflows. These workflows automate testing the format of the code and packaging it for systematic deployment, which ensures that changes are validated and will sync cleanly with the security tooling. Once changes have passed the testing and validation phase, the CI/CD pipeline can automatically deploy or update rules whenever changes are identified in the repo.
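The validation phase can be as simple as a script that the pipeline runs against every changed rule file. The sketch below assumes rules are stored as JSON objects with a hypothetical set of required fields (`name`, `description`, `severity`, `query`, `techniques`); a real rule schema will depend on the security tooling being targeted.

```python
import json

# Hypothetical rule schema: field name -> expected Python type.
REQUIRED_FIELDS = {
    "name": str,
    "description": str,
    "severity": str,
    "query": str,
    "techniques": list,
}
ALLOWED_SEVERITIES = {"low", "medium", "high", "critical"}


def validate_rule(rule: dict) -> list:
    """Return a list of human-readable problems; an empty list means the rule passed."""
    errors = []
    for field, expected_type in REQUIRED_FIELDS.items():
        if field not in rule:
            errors.append(f"missing field: {field}")
        elif not isinstance(rule[field], expected_type):
            errors.append(f"field {field} should be {expected_type.__name__}")
    severity = rule.get("severity")
    if isinstance(severity, str) and severity not in ALLOWED_SEVERITIES:
        errors.append(f"unknown severity: {severity}")
    return errors


def validate_rule_text(text: str) -> list:
    """Parse the raw file contents, then apply the schema checks."""
    try:
        rule = json.loads(text)
    except json.JSONDecodeError as exc:
        return [f"invalid JSON: {exc.msg}"]
    if not isinstance(rule, dict):
        return ["rule must be a JSON object"]
    return validate_rule(rule)
```

In a pipeline, a wrapper would call `validate_rule_text` on each changed file and exit with a non-zero status if any errors come back, which blocks the deployment stage.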

Technology Flow

The diagram below shows how BigID has deployed its threat detection pipeline.

We keep a backlog of ideas and detection hypotheses that we want to develop, based on industry news and open-source projects like Sigma. From there, we pull these ideas into our sprints to develop, test, and deploy them to our ever-growing detection pipeline, using our source-controlled tools and the corresponding processes. This ensures we are rolling out reliable, validated detections that cover multiple adversary techniques. Once a detection is deployed to our EDR system, we regularly check whether it is a) working and detecting what it was intended to detect, or b) not firing at all. Either outcome gives us an opportunity to confirm that we are deploying effective signatures to prevent malware from entering our environment.
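The post-deployment check described above can be sketched as a comparison between the list of deployed rules and the alert counts exported from the EDR. The function name and the shape of the inputs (rule names mapped to alert counts over some review window) are assumptions for illustration; how those counts are retrieved depends on the EDR in use.

```python
def find_silent_rules(deployed_rules, alert_counts):
    """Return deployed rule names with zero alerts in the review window.

    deployed_rules: iterable of rule names currently deployed.
    alert_counts: dict mapping rule name -> number of alerts observed.
    Rules missing from alert_counts are treated as having fired zero times.
    """
    return sorted(name for name in deployed_rules if alert_counts.get(name, 0) == 0)
```

A silent rule is not necessarily broken, since the behavior may simply not have occurred, but it is a reasonable candidate for retesting against simulated activity.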

Lessons Learned

From conceptualization to implementation, the biggest pain point was developing the CI/CD component of our threat detection pipeline. It gave our team the opportunity to learn how to develop, connect, and synchronize security technologies using CI/CD tooling, and it ultimately prepares us for future initiatives.

This work has also opened up additional opportunities and ideas for making our threat detection strategy more effective. We will be looking at creating a threat detection lab that covers not only traditional operating systems but also containers and Kubernetes systems.

These are the steps BigID has taken to enhance the overall security posture of the organization and increase the number of detection rules we can deploy across our security technologies. With this in place, we can operate and collaborate more effectively and efficiently to ensure that BigID systems and networks are secure from the endpoint to the cloud.

Read more on this topic in our blog post on data detection and response.