AI models are only as good as the data they are trained on. Most data pipelines are messy, incomplete, or noncompliant, which puts accuracy, privacy, and safety at risk. BigID helps organizations build secure AI data pipelines by:

-

Classifying structured and unstructured data (including code, chat, and logs) by sensitivity

-

Categorizing datasets with business taxonomies for better context

-

Cataloging with a unified, searchable metadata index

-

Curating training datasets with semantic search for relevance and quality

-

Cleansing and redacting sensitive or toxic data before training

-

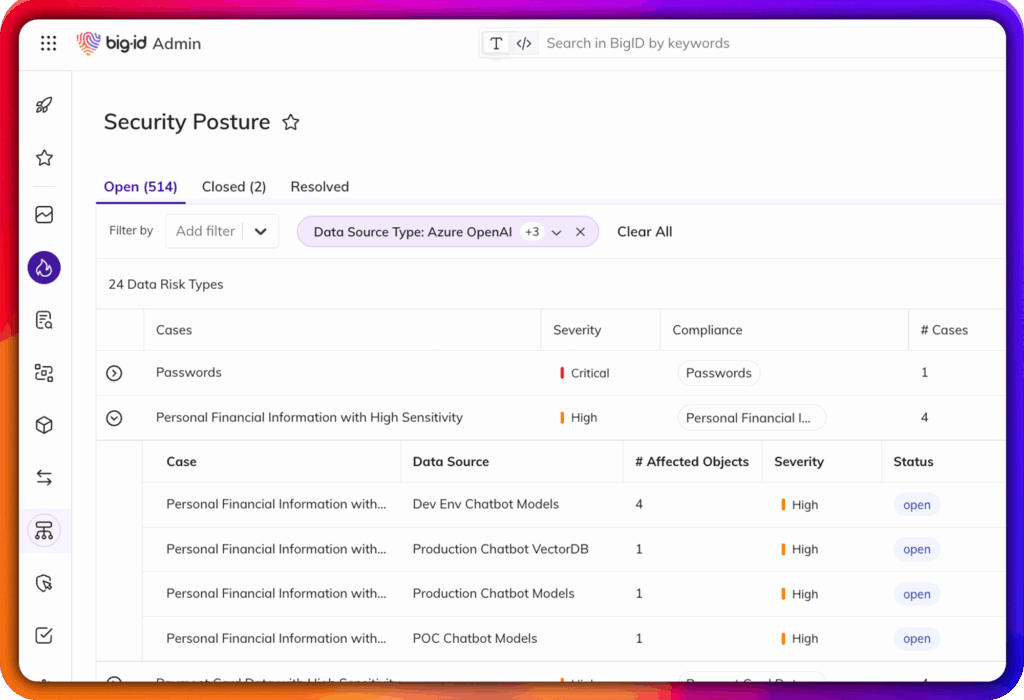

Compliance-checking datasets against global regulations and internal policies

-

Controlling staged data pipelines with policy guardrails and governance