AI-powered chatbots and applications are becoming everyday tools across the enterprise. Employees are pasting prompts, sharing outputs, and building homegrown apps faster than IT teams can govern them. But this rapid adoption brings a critical blind spot: sensitive data leaks that hide in plain sight.

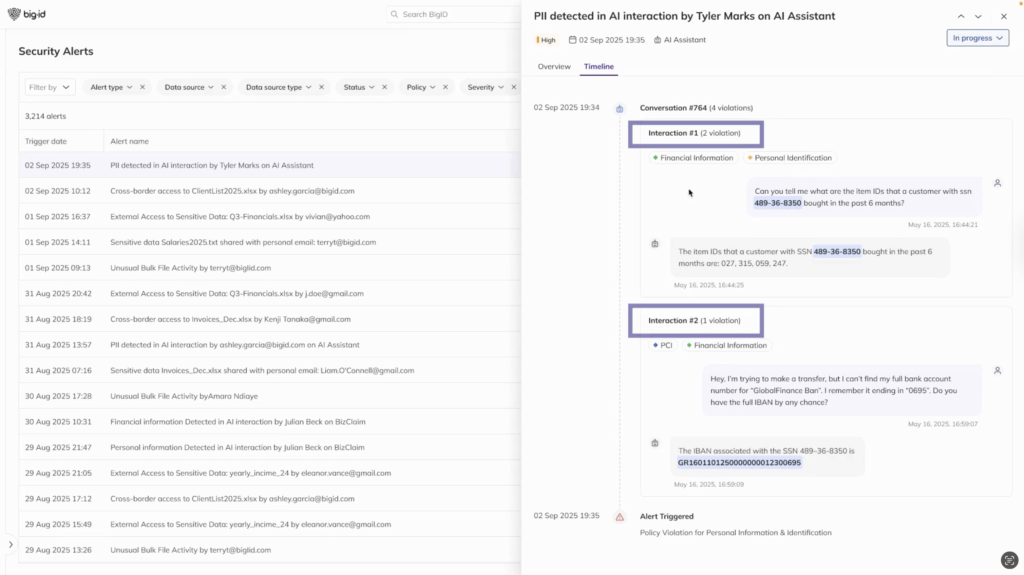

Every conversation, every prompt, and every response is a potential exposure point. From Social Security numbers in customer support chats to IBANs embedded in application outputs, the risk is no longer hypothetical – it’s happening today.

When Conversations Become a Liability

As organizations lean into AI, they face a growing challenge: how to keep conversations safe. Traditional security tools weren’t designed to monitor or protect AI prompts. They can’t catch when an employee pastes financial data into a chatbot or when a model response accidentally includes sensitive information.

This lack of oversight creates real consequences:

- Data leakage that bypasses existing controls

- Compliance violations under GDPR, PCI DSS, HIPAA, and other regulations

- Loss of customer trust if private information is mishandled

The reality is that AI prompts are the new frontier of data exposure. Without visibility and safeguards, organizations risk letting sensitive data slip through the cracks.

Access Control for GenAI Conversations

That’s where BigID comes in. With Access Control to Sensitive Data in GenAI Conversations, organizations can finally gain visibility, control, and guardrails for sensitive data flowing through AI applications.

BigID continuously detects sensitive data in both prompts and responses, from personal identifiers to financial details and beyond. It highlights violations, shows which users are involved, and provides a timeline view of risky conversations. Just as importantly, it goes beyond detection to proactive protection: redacting sensitive information, masking PII, and enforcing access controls on the fly.
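To make the detect-then-mask idea concrete, here is a minimal Python sketch of the general technique. It uses the two examples mentioned earlier: US Social Security numbers and IBANs. This is not BigID's detection engine or API. The function names (`scan_and_mask`, `mask`, `iban_is_valid`) and the regular expressions are hypothetical, and production classifiers go well beyond simple pattern matching. IBAN candidates are confirmed with the ISO 13616 mod-97 checksum to cut false positives. Masked values keep their last four characters so the conversation stays readable.

```python
import re
from dataclasses import dataclass

# Hypothetical, illustrative patterns only; not BigID's classifiers.
# US SSN in NNN-NN-NNNN form, excluding some never-issued area/group/serial values.
SSN_RE = re.compile(r"\b(?!000|666|9\d\d)\d{3}-(?!00)\d{2}-(?!0000)\d{4}\b")
# IBAN: country code, 2 check digits, then 4-character groups (optionally space-separated).
IBAN_RE = re.compile(r"\b[A-Z]{2}\d{2}(?: ?[A-Z0-9]{4}){2,7}(?: ?[A-Z0-9]{1,3})?\b")


@dataclass(frozen=True)
class Finding:
    kind: str   # detector label, e.g. "SSN" or "IBAN"
    start: int  # character offset of the match in the original text
    end: int


def iban_is_valid(candidate: str) -> bool:
    """ISO 13616 mod-97 check: move the first 4 chars to the end, map A-Z to 10-35, expect remainder 1."""
    compact = candidate.replace(" ", "")
    rearranged = compact[4:] + compact[:4]
    digits = "".join(str(int(ch, 36)) for ch in rearranged)
    return int(digits) % 97 == 1


def mask(value: str, keep_last: int = 4) -> str:
    """Replace every alphanumeric character except the last `keep_last` with '*', keeping separators."""
    total = sum(ch.isalnum() for ch in value)
    out, seen = [], 0
    for ch in value:
        if ch.isalnum():
            seen += 1
            out.append(ch if seen > total - keep_last else "*")
        else:
            out.append(ch)
    return "".join(out)


# Each detector: (label, pattern, validator that confirms a raw regex match).
DETECTORS = [
    ("SSN", SSN_RE, lambda s: True),
    ("IBAN", IBAN_RE, iban_is_valid),
]


def scan_and_mask(text: str) -> tuple[str, list[Finding]]:
    """Detect sensitive values in a prompt or response and return (masked_text, findings)."""
    candidates = []
    for kind, pattern, is_valid in DETECTORS:
        for m in pattern.finditer(text):
            if is_valid(m.group()):
                candidates.append(Finding(kind, m.start(), m.end()))
    candidates.sort(key=lambda f: f.start)

    pieces, kept, cursor = [], [], 0
    for f in candidates:
        if f.start < cursor:  # skip a match that overlaps one already masked
            continue
        pieces.append(text[cursor:f.start])
        pieces.append(mask(text[f.start:f.end]))
        kept.append(f)
        cursor = f.end
    pieces.append(text[cursor:])
    return "".join(pieces), kept
```

For example, the prompt `"Customer SSN is 123-45-6789, refund to DE89 3704 0044 0532 0130 00 please."` becomes `"Customer SSN is ***-**-6789, refund to **** **** **** **** **30 00 please."`, with one SSN finding and one IBAN finding. Returning character offsets alongside the masked text is one way to support violation reporting. A caller could record who sent the prompt and where the match occurred without storing the raw value.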

Unlike legacy monitoring tools, BigID doesn’t just flag risks; it helps stop them, while preserving the context and functionality of AI interactions.

AI Outcomes That Matter

- Visibility into AI interactions – See where sensitive data shows up across prompts and responses.

- Prevent data leakage – Apply masking and redaction policies that keep conversations intact but safe.

- Accelerate investigations – Use violation alerts and timelines to speed up incident response.

- Strengthen AI adoption – Build trust and confidence by reducing risk without slowing down innovation.

Why Now: Guardrails for the AI Era

AI adoption isn’t slowing down. In fact, it’s accelerating – and so are the risks. Organizations that put prompt protection in place today can stay ahead of exposure, regulatory pressure, and evolving AI security threats. BigID is helping enterprises embrace AI with confidence by making data protection actionable, automatic, and built for the future.

Take the Next Step

Learn how BigID’s Access Control for GenAI can help your organization secure conversations, prevent data leaks, and enable safe adoption of AI. Schedule a 1:1 with one of our AI security experts today.