AI agents are no longer experimental tools confined to innovation labs. They are already embedded across enterprise environments—reading files, responding to tickets, provisioning access, generating reports, and initiating remediation actions across critical systems. Their adoption is accelerating because they reduce friction and automate decision-making at scale.

Yet many organizations are deploying these agents under a risky assumption: that existing IAM controls, model security, or high-level AI governance frameworks are sufficient. While those controls remain necessary, they are fundamentally incomplete for autonomous systems that can reason, act, and continuously access and move sensitive data without human intervention.

AI agents are not just models. They are operational actors. And actors operating at machine speed, with persistent access to enterprise data, require a new security discipline—one grounded in data, not just identity or infrastructure. This is the foundation of AI Agent Posture Management.

Autonomous Agents as Invisible Insiders

Security teams are well-versed in managing human risk. They track users, roles, entitlements, and activity to understand who has access to what, and why. That model breaks down when applied to AI agents.

Agents do not log in interactively, request access repeatedly, or experience friction when acting. Once deployed, many operate continuously using service accounts, OAuth apps, or API keys—often outside traditional identity reviews. Over time, they function as permanently privileged insiders.

This challenge is often framed as an AI governance problem, but at its core, it is a data security problem. The primary risk is not the agent itself, but the sensitive, regulated, and business-critical data it can access and act upon.

Defining AI Agent Posture Management

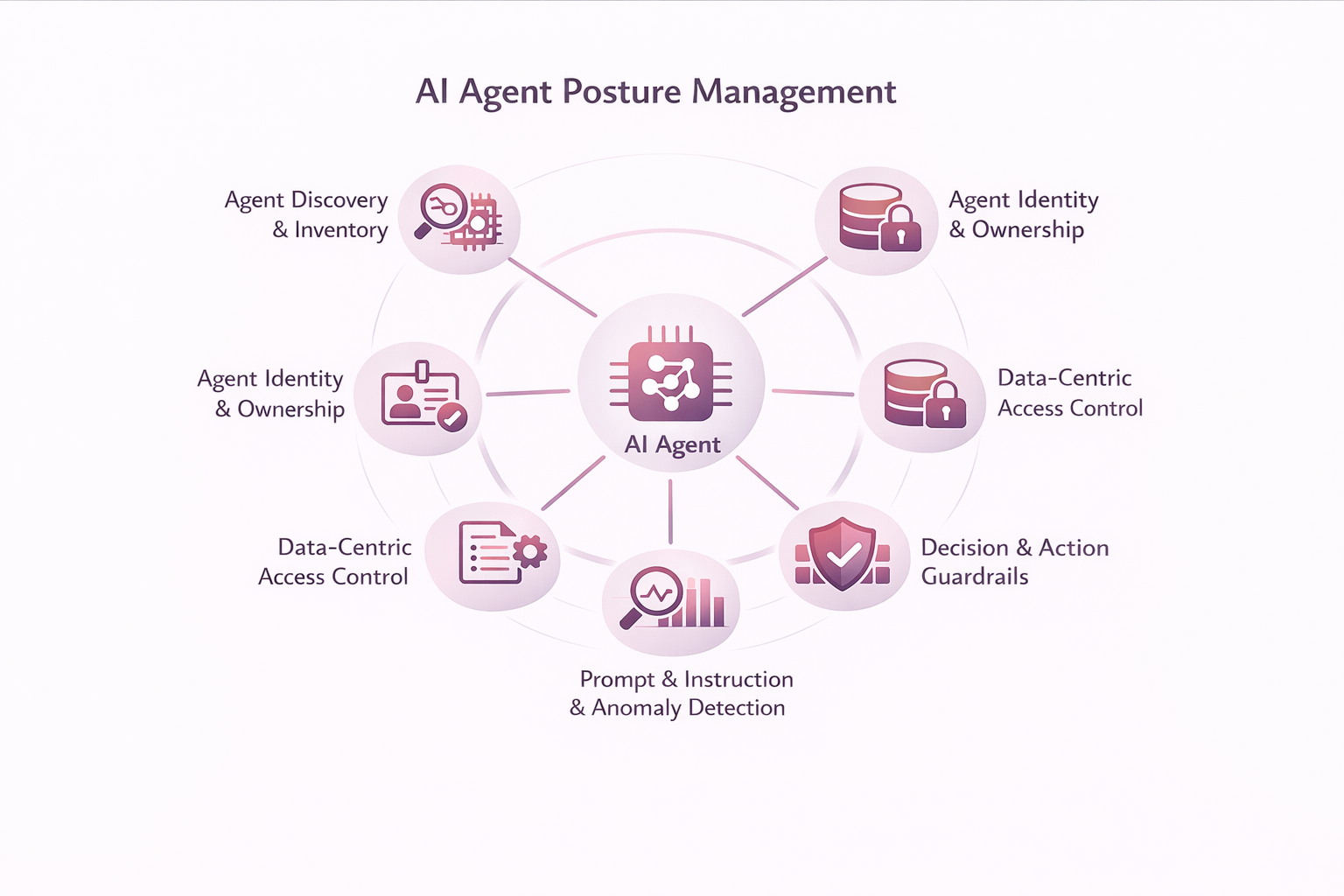

AI Agent Posture Management is the continuous visibility, control, and governance of what AI agents can access, decide, and do across enterprise data. It extends security posture management to autonomous actors whose behavior cannot be fully anticipated at deployment time.

In practice, it enables security leaders to answer questions that identity- or model-centric approaches cannot reliably address:

- What data can this agent access, and what data does it actually use?

- What actions can it take with that data?

- Is its behavior still aligned with policy and intent?

- When something goes wrong, can its actions be explained, constrained, or stopped?

Without data-level visibility and control, these questions remain unanswered—regardless of how well identities are managed or models are governed.

Core Capabilities of AI Agent Posture Management

Agent Discovery and Inventory

AI Agent Posture Management begins with visibility. Organizations cannot govern what they cannot see, and most enterprises lack a complete inventory of the AI agents already operating across their environments.

Agents are created by developers, embedded in SaaS platforms, introduced through automation tools, or enabled by AI features inside existing applications. Many authenticate non-interactively and operate continuously, placing them outside traditional identity inventories.

Agent Discovery establishes the foundation for posture management by continuously identifying and inventorying agents across the enterprise, including:

- Discovery of AI agents across applications, cloud services, and automation frameworks

- Identification of non-human identities such as service principals, OAuth apps, and API-based access

- Correlation of agents to the data they actually access and modify

- Detection of unmanaged, dormant, or over-privileged agents

Without discovery, agents operate as invisible insiders. With it, posture controls can be applied intentionally and consistently.
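
To make the last two items concrete, here is a minimal sketch of how an inventory entry might be checked for unmanaged, dormant, or over-privileged agents. The `AgentRecord` fields, the flag names, and the 30-day dormancy window are all hypothetical, chosen for illustration rather than taken from any product schema.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class AgentRecord:
    """One inventory entry. All field names are hypothetical."""
    agent_id: str
    credential_type: str        # e.g. "service_principal", "oauth_app", "api_key"
    owner: Optional[str]        # None means nobody has claimed the agent
    granted_scopes: set[str]    # data scopes the agent is permitted to touch
    used_scopes: set[str]       # data scopes it was actually observed touching
    last_activity: datetime


def posture_flags(agent: AgentRecord, now: datetime,
                  dormant_after: timedelta = timedelta(days=30)) -> list[str]:
    """Return the posture findings that apply to one agent."""
    flags = []
    if agent.owner is None:
        flags.append("unmanaged")
    if now - agent.last_activity > dormant_after:
        flags.append("dormant")
    # Scopes that were granted but never used suggest excess access.
    if agent.granted_scopes - agent.used_scopes:
        flags.append("over_privileged")
    return flags
```

The over-privilege check depends on correlating granted scopes with observed usage, which is why discovery needs data activity and not only an identity list.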

Agent Identity and Ownership

Once discovered, agents must be owned and accountable. Every AI agent should be treated as a first-class security principal with a defined purpose and scope.

Agent Identity and Ownership ensures accountability by:

- Assigning a unique, auditable identity to each agent

- Defining a clear owner and documented business purpose

- Establishing explicit scope boundaries across systems and data domains

Identity alone, however, is insufficient. Knowing who an agent is does not explain what data it uses or how it behaves over time—which is why identity must be paired with data context.

Agent Classification and Risk Profiling

Discovery and identity do not establish posture on their own. Not all agents carry the same risk, and permissions alone provide an incomplete picture.

Agent Classification and Risk Profiling evaluates agents based on observed behavior and context, including:

- The sensitivity and regulatory nature of the data accessed

- What data agents actually read, write, or propagate

- The breadth of access across systems and regions

- The agent’s level of autonomy and action authority

Risk profiles must be continuously updated as agents evolve. By grounding classification in real data interaction, organizations can focus controls where they matter most.
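
One way to picture a profile grounded in real data interaction is a small scoring function over observed access events. The sketch below is an assumption-heavy illustration: the sensitivity tiers, operation weights, autonomy levels, and the formula itself are invented here and would need calibration in any real program.

```python
from dataclasses import dataclass

# Illustrative weights only; none of these values come from a standard.
SENSITIVITY = {"public": 0, "internal": 1, "confidential": 2, "regulated": 3}
OPERATION = {"read": 1, "write": 2, "propagate": 3}
AUTONOMY = {"suggest_only": 1, "act_with_approval": 2, "fully_autonomous": 3}


@dataclass(frozen=True)
class ObservedAccess:
    system: str
    sensitivity: str   # key into SENSITIVITY
    operation: str     # key into OPERATION


def risk_score(observed: list[ObservedAccess], autonomy: str) -> int:
    """Score an agent from what it was observed doing, not from its permissions."""
    if not observed:
        return 0
    # Worst single interaction: data sensitivity times what was done with it.
    worst = max(SENSITIVITY[o.sensitivity] * OPERATION[o.operation] for o in observed)
    # Breadth: number of distinct systems touched.
    breadth = len({o.system for o in observed})
    return (worst + breadth) * AUTONOMY[autonomy]
```

Because the inputs are observations, re-running the function as new activity arrives keeps the profile current as the agent evolves.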

Data-Centric Access Control

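System-level permissions are too coarse for autonomous agents that interact directly with sensitive information. A role that grants read access to an entire file share, for example, does not distinguish public documents from regulated records.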

Data-Centric Access Control enforces least privilege at the data layer by enabling:

- Visibility into the specific data that agents access

- Sensitivity-aware access decisions based on classification and risk

- Task-bound and time-bound access instead of standing privileges

Without data-level controls, organizations cannot prevent overexposure—even if identities and permissions appear correct on paper.
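
As a rough sketch of task-bound, time-bound, sensitivity-aware access, the check below evaluates a single grant against a single request. The `Grant` shape, the four sensitivity levels, and the `is_allowed` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass
from datetime import datetime

LEVELS = ["public", "internal", "confidential", "regulated"]  # low to high


@dataclass(frozen=True)
class Grant:
    agent_id: str
    task_id: str            # access is tied to one task, not standing
    dataset: str
    max_sensitivity: str    # highest classification the agent may touch
    expires_at: datetime


def is_allowed(grant: Grant, agent_id: str, task_id: str, dataset: str,
               record_sensitivity: str, now: datetime) -> bool:
    """Allow access only for the granted agent, task, and dataset, before expiry,
    and only for records at or below the granted sensitivity."""
    return (
        grant.agent_id == agent_id
        and grant.task_id == task_id
        and grant.dataset == dataset
        and now < grant.expires_at
        and LEVELS.index(record_sensitivity) <= LEVELS.index(grant.max_sensitivity)
    )
```

The decision is made per record, so an agent with a valid grant on a dataset can still be refused a regulated record inside it.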

Decision and Action Guardrails

AI agents do not stop at analysis—they act. As autonomy increases, so does potential impact.

Decision and Action Guardrails define operational boundaries, including:

- Explicit limits on permitted actions

- Human-in-the-loop approval for high-risk decisions

- Safeguards for irreversible actions, such as deletion or sharing

- Kill switches and rollback paths

Automation without guardrails amplifies risk rather than efficiency.
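
A guardrail layer can be pictured as a small policy function that sits between the agent and the systems it acts on. The sketch below is illustrative only: the action names, the `disabled_agents` kill switch, and the three verdicts are assumptions, and rollback is out of scope here.

```python
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"
    REQUIRE_APPROVAL = "require_approval"
    DENY = "deny"


# Hypothetical policy data for illustration.
PERMITTED = {"read_ticket", "update_ticket", "share_file", "delete_file"}
IRREVERSIBLE = {"share_file", "delete_file"}
disabled_agents: set[str] = set()   # the kill switch: add an agent ID to stop it


def evaluate(agent_id: str, action: str, human_approved: bool = False) -> Verdict:
    """Decide whether an agent may perform an action right now."""
    if agent_id in disabled_agents:
        return Verdict.DENY
    if action not in PERMITTED:
        return Verdict.DENY
    # Irreversible actions wait for a human unless approval is already recorded.
    if action in IRREVERSIBLE and not human_approved:
        return Verdict.REQUIRE_APPROVAL
    return Verdict.ALLOW
```

Checking the kill switch first means a disabled agent is stopped even for actions it would otherwise be allowed to take.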

Prompt and Instruction Governance

Agent prompts and instructions are a critical policy surface that directly influences behavior.

Prompt and Instruction Governance enables organizations to:

- Version and audit agent instructions

- Detect prompt drift or manipulation

- Separate system intent from user-provided context

- Enforce data and compliance policies within agent reasoning

Without visibility into instructions, agent behavior becomes unpredictable and ungovernable.
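
Versioning and drift detection can start with something as simple as fingerprinting the approved instruction text. The sketch below assumes a hypothetical in-memory `InstructionRegistry`; it detects any change to the text, but it does not judge whether a change is malicious, and it does not address injection through user-provided context.

```python
import hashlib


def fingerprint(instructions: str) -> str:
    """Stable SHA-256 fingerprint of an instruction text."""
    return hashlib.sha256(instructions.encode("utf-8")).hexdigest()


class InstructionRegistry:
    """Append-only history of approved instruction versions per agent."""

    def __init__(self) -> None:
        self._versions: dict[str, list[str]] = {}

    def approve(self, agent_id: str, instructions: str) -> int:
        """Record a new approved version and return its 1-based version number."""
        history = self._versions.setdefault(agent_id, [])
        history.append(fingerprint(instructions))
        return len(history)

    def has_drifted(self, agent_id: str, running_instructions: str) -> bool:
        """True when the running text differs from the latest approved version."""
        history = self._versions.get(agent_id)
        if not history:
            return True   # nothing approved yet, so treat the agent as ungoverned
        return fingerprint(running_instructions) != history[-1]
```

Only the system instructions should be fingerprinted; user-provided context changes with every request and belongs in a separate channel.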

Continuous Monitoring and Anomaly Detection

Static controls fail in dynamic environments. AI Agent Posture Management requires continuous visibility into agent behavior.

Continuous Monitoring and Anomaly Detection provides:

- Ongoing monitoring of data access and actions

- Behavioral baselining per agent

- Detection of anomalous or policy-violating behavior

- Correlation with identity, data risk, and threat signals

This enables early intervention—before minor deviations become incidents.
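
Per-agent baselining can be illustrated with a simple z-score over a history of activity counts, such as records read per day. This is a deliberately basic sketch: the function name, the threshold of 3 standard deviations, and the choice of metric are assumptions, and production systems typically use richer models.

```python
from statistics import mean, stdev


def is_anomalous(baseline: list[int], observed: int, threshold: float = 3.0) -> bool:
    """Flag an observation far above an agent's own historical baseline."""
    if len(baseline) < 2:
        return False   # not enough history to form a baseline
    mu, sigma = mean(baseline), stdev(baseline)
    if sigma == 0:
        # A perfectly flat history: any increase is a deviation.
        return observed > mu
    return (observed - mu) / sigma > threshold
```

For an agent that normally reads about 100 records a day, a day with 5,000 reads is flagged while a day with 108 is not. Keeping one baseline per agent matters because a volume that is normal for a reporting agent may be highly unusual for a ticket-triage agent.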

The Risks This Discipline Is Designed to Prevent

Without these capabilities, organizations face growing risk: privilege creep, silent data exfiltration through legitimate workflows, prompt injection, and loss of accountability during investigations. When incidents occur, attributing outcomes to “the AI” is neither sufficient nor defensible.

Why AI Agent Posture Management Is Fundamentally About Data

Much of the market focuses on securing models or managing identities. Those approaches are necessary, but they stop short of addressing the most consequential risk surface: how autonomous agents interact with enterprise data over time.

- Identity-only controls cannot see data sensitivity or actual usage.

- Model-only controls cannot govern downstream actions.

Without data context, posture is inferred—not enforced. AI Agent Posture Management must be rooted in a data-first security platform that understands data sensitivity, exposure, and activity in real time.

How BigID Enables Data-First Governance for AI Agents

BigID helps organizations discover and classify sensitive data, understand access and exposure, monitor data activity, and enforce policy-driven remediation at scale. AI Agent Posture Management extends these capabilities to autonomous actors operating across the data estate.

Rather than asking whether an AI model is “safe,” organizations should ask a more practical question: Does our data remain governed when AI is allowed to act? That shift is what enables AI adoption without sacrificing control.

From AI Governance to Agent Posture

AI agents are already operating inside enterprise environments at scale. Organizations that treat them as simple tools will struggle to maintain control. Those that treat agents as identities—governed through data-centric controls—will scale AI safely and responsibly.

AI Agent Posture Management is not a future consideration. It is the next evolution of data security, and defining it early ensures it evolves on your terms—not in response to an incident.

Want to learn more? Schedule a 1:1 with one of our AI and data security experts.