AI is only as trustworthy as the data it’s built on. But in most organizations, there’s no clear way to govern what data should—or shouldn’t—be used in generative AI, copilots, or large language models (LLMs). Sensitive files get pulled into prompts. Proprietary content gets exposed. Policy violations happen before teams even know they need to look.

BigID’s new Data Labeling for AI changes that.

With this launch, security and governance teams can finally classify, label, and control data according to whether, and how, it may be used in AI. That means fewer surprises, stronger policy enforcement, and more trustworthy AI, all before any data flows into the pipeline.

Why It Matters

Without usage-based labeling, AI tools treat all data the same. And that’s a problem.

Some data is fine to use in training or prompts; some isn't. But unless teams can label what's approved for AI use, what's restricted, and what's prohibited, there's no scalable way to enforce AI usage policies or avoid costly mistakes.

BigID’s Data Labeling for AI solves this with intelligent, automated labeling built directly into the platform.

What It Does

BigID’s Data Labeling for AI lets teams:

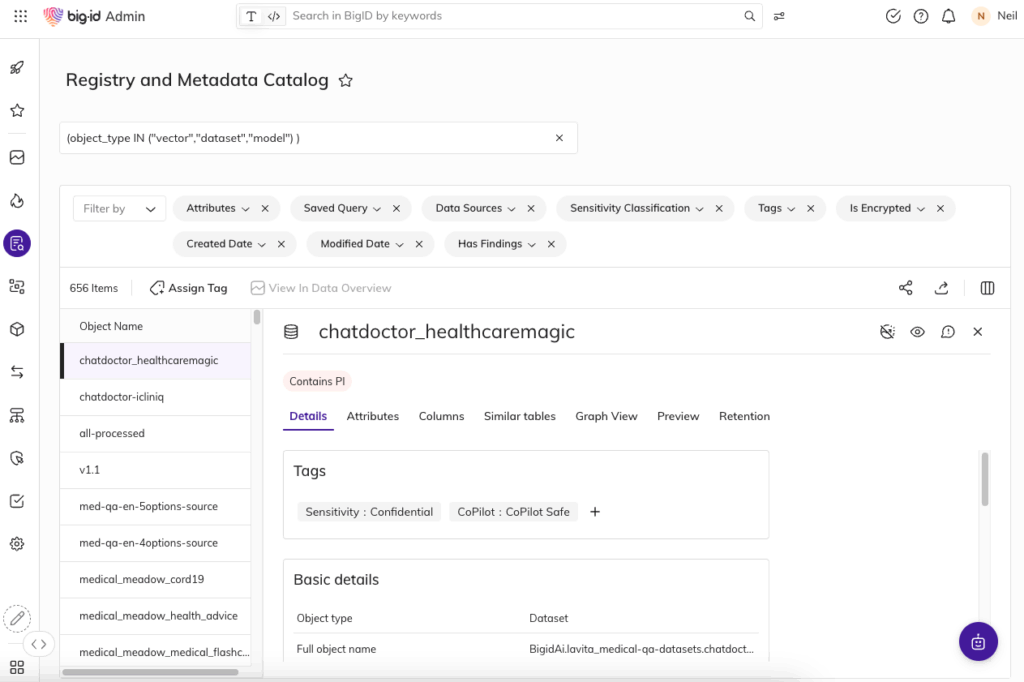

- Classify data by AI eligibility: Automatically label data based on whether it’s allowed for GenAI use, with built-in options like “safe,” “restricted,” or “prohibited.”

- Customize labels to your policies: Define your own rules and risk thresholds to reflect internal governance, regulatory requirements, or industry best practices.

- Apply labels across all data types: Labeling works across the enterprise, from structured systems to files in SaaS applications and collaboration content.

- Act on the labels: Use BigID’s policy engine to restrict access, trigger alerts, or block downstream use before data enters prompts, models, or training sets.
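To make the "label, then act on the label" idea concrete, here is a minimal Python sketch of a three-tier AI-eligibility scheme feeding a simple policy gate. It is not BigID's API or its labeling logic. The `AILabel` tiers mirror the "safe," "restricted," and "prohibited" options mentioned above, but `DataAsset`, `label_for_ai`, `allowed_in_prompt`, the classification names, and the rule sets are all hypothetical assumptions chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class AILabel(Enum):
    """Three AI-eligibility tiers (names mirror the post; values are illustrative)."""
    SAFE = "safe"
    RESTRICTED = "restricted"
    PROHIBITED = "prohibited"


@dataclass(frozen=True)
class DataAsset:
    """Hypothetical stand-in for a discovered, classified data asset."""
    name: str
    classifications: frozenset  # e.g. frozenset({"PII", "financial"})


# Hypothetical policy rules: which classifications push an asset into which tier.
PROHIBITED_CLASSES = {"PII", "PHI", "credentials"}
RESTRICTED_CLASSES = {"financial", "source_code"}


def label_for_ai(asset: DataAsset) -> AILabel:
    """Assign the most severe tier triggered by any of the asset's classifications."""
    if asset.classifications & PROHIBITED_CLASSES:
        return AILabel.PROHIBITED
    if asset.classifications & RESTRICTED_CLASSES:
        return AILabel.RESTRICTED
    return AILabel.SAFE


def allowed_in_prompt(asset: DataAsset, approved_for_restricted: bool = False) -> bool:
    """Gate downstream use: prohibited data never passes; restricted needs an explicit approval."""
    label = label_for_ai(asset)
    if label is AILabel.PROHIBITED:
        return False
    if label is AILabel.RESTRICTED:
        return approved_for_restricted
    return True


if __name__ == "__main__":
    assets = [
        DataAsset("hr/salaries.xlsx", frozenset({"PII", "financial"})),
        DataAsset("eng/design-doc.md", frozenset({"source_code"})),
        DataAsset("marketing/brochure.pdf", frozenset()),
    ]
    for a in assets:
        print(a.name, label_for_ai(a).value, allowed_in_prompt(a))
```

The design choice here is "most severe tier wins": an asset carrying both personal data and financial data is treated as prohibited, not restricted. A real deployment would pull classifications from discovery results and express these rules in its own policy engine rather than in hard-coded sets.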

Real-World Use Cases

- Prevent data leakage into copilots: Label sensitive content in SharePoint or Google Drive as “prohibited,” and automatically block AI plugins from accessing it.

- Streamline GenAI readiness: Give data scientists a curated, labeled dataset that has already been cleared for training, with no manual triage needed.

- Enforce internal usage policies: Define what's acceptable for GenAI use, then label and govern data accordingly so your teams stay compliant by default.

Why BigID

BigID is the only platform that unifies discovery, classification, and control for data and AI. With Data Labeling for AI, customers can:

- Operationalize AI governance before issues arise

- Reduce risk of exposure, misuse, and policy violations

- Align AI usage with evolving frameworks and enterprise standards

See It in Action

Get a demo of BigID's Data Labeling for AI and learn how to take control of your AI pipeline before ungoverned data takes control of it for you. Set up a 1:1 with one of our AI and Data Security experts today!