AI is now embedded in the software you use every day – from HR tools to CRM systems to cloud services. Vendors are rapidly adopting generative AI to boost features and automate tasks. But while the upside is clear, the risks are often hidden.

Most vendors don’t disclose what models they’re using, how those models work, or whether your data is being used to train or fine-tune them. Your data might be powering someone else’s algorithm without your consent or knowledge. Some vendors’ AI features even generate outputs that inadvertently expose customer information. And the usage terms that matter most are often buried, vague, or missing entirely.

Even the most mature internal AI governance program won’t cover you if you can’t see what your vendors are doing. This is a growing blind spot and a fast-moving one.

And according to our 2025 AI Risk & Readiness Report, 64% of organizations lack visibility into their AI risks altogether, while nearly half have no AI-specific security controls in place. Only 6% say they have a mature AI security strategy.

Why Traditional Vendor Management Isn’t Enough

Traditional third-party risk tools weren’t built for this. They focus on security certifications, contract language, and compliance checklists. But AI introduces a new kind of exposure: one that blends data privacy, model behavior, explainability, and transparency.

It’s not just about whether a vendor secures your data. It’s about whether the vendor can explain how its AI used that data, whether the model was trained responsibly, and whether it exposes your organization to bias, hallucinations, or hidden data leakage.

A vendor might use an LLM to power “smart” search. But if queries are stored and reused for training, or if synthetic results are generated from previously seen data, your risk grows fast—and quietly. And without proper oversight, you won’t even know it’s happening.

AI has changed how risk works. Now it’s time to change how you manage it.

Bringing AI Transparency to Your Vendor Ecosystem

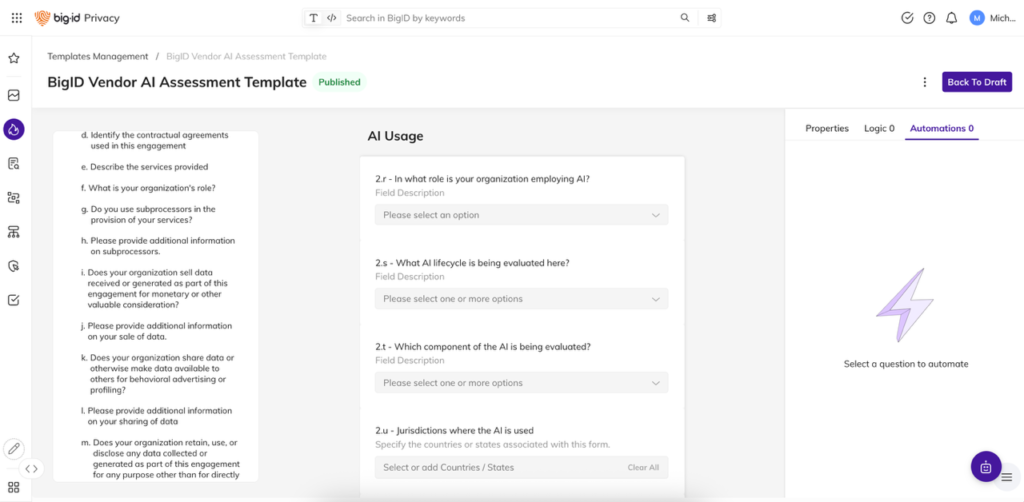

With BigID’s Vendor AI Assessment, you can finally take a structured and comprehensive approach to evaluating vendor AI risk. We help you:

- Discover which vendors are using AI, what types, and for what purposes

- Understand how AI models interact with your data and where exposure might occur

- Identify whether your data is used to train, fine-tune, or augment vendor models

- Evaluate the explainability and auditability of vendor AI outputs

- Assign risk scores to vendors based on their AI behaviors and data practices
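
To make the scoring idea concrete, here is a minimal sketch of how risk factors like the ones listed above could roll up into a single vendor score. The factor names, weights, and tier thresholds are hypothetical and chosen only for illustration; they are not BigID’s scoring model.

```python
from dataclasses import dataclass

# Hypothetical weights (summing to 100). Illustrative only: these are
# assumptions for this sketch, not BigID's actual scoring model.
WEIGHTS = {
    "trains_on_customer_data": 40,
    "model_undisclosed": 25,
    "outputs_not_auditable": 20,
    "no_opt_out": 15,
}


@dataclass
class VendorAIProfile:
    """Answers gathered about one vendor's AI practices (hypothetical fields)."""
    name: str
    trains_on_customer_data: bool
    model_undisclosed: bool
    outputs_not_auditable: bool
    no_opt_out: bool


def risk_score(profile: VendorAIProfile) -> int:
    """Sum the weights of every risk factor that is present (0 to 100)."""
    return sum(w for factor, w in WEIGHTS.items() if getattr(profile, factor))


def risk_tier(score: int) -> str:
    """Map a numeric score to a coarse tier using assumed thresholds."""
    if score >= 60:
        return "high"
    if score >= 30:
        return "medium"
    return "low"


# Example: a vendor that trains on customer data and won't name its model.
acme = VendorAIProfile("Acme CRM", True, True, False, False)
print(acme.name, risk_score(acme), risk_tier(risk_score(acme)))
```

An additive score like this is easy to explain to vendors and auditors. In practice, the factors and weights would reflect your own policies and regulatory obligations.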

You don’t need to take a vendor’s word for it. We help you ask the right questions, gather evidence, and create policies for how AI should be used in your vendor ecosystem.

This kind of transparency and accountability isn’t just overdue—it’s essential. Industry experts agree that organizations need to move beyond static surveys and start taking a more proactive approach to third-party AI oversight.

“BigID continues to innovate with Vendor AI Assessment. Given the rapid integration of AI in vendor offerings, businesses must demand transparency and accountability,” said Dr. Edward Amoroso, CEO of TAG and Research Professor at NYU. “BigID’s Vendor AI Assessment provides a crucial tool for organizations to understand and mitigate the unique risks posed by third-party AI use.”

Vendor AI Assessment also supports documentation and audit readiness, so you can respond to regulatory inquiries with confidence and clarity.

Why We Built Vendor AI Assessment

We built Vendor AI Assessment because existing solutions were not addressing this growing blind spot. Organizations have been investing in responsible AI practices internally, but most haven’t extended that same scrutiny to their vendor relationships. The risk is real, and it’s already here.

Our new capability builds on our existing Vendor Management platform and adds assessments focused specifically on AI risk. With it, privacy, security, and legal teams can better understand how vendors use AI, what that means for the organization’s data, and whether those uses comply with internal policy and external regulation.

We didn’t want to simply ask vendors if they’re using AI. We wanted to help organizations interrogate the type of AI being used, how it’s trained, the data lifecycle behind it, and the governance controls (or lack thereof) surrounding it. This goes far beyond a checkbox – it’s about understanding the actual impact AI has on your risk profile.
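
As a rough illustration of going beyond a checkbox, the sketch below models an assessment as structured answers with attached evidence, then flags what is still missing. The category names, the `Answer` type, and the `find_gaps` helper are all hypothetical, invented for this example rather than taken from the product.

```python
from dataclasses import dataclass, field

# Hypothetical categories mirroring the four areas named above.
REQUIRED_CATEGORIES = ("model_type", "training", "data_lifecycle", "governance")


@dataclass
class Answer:
    """One vendor response plus any supporting evidence (hypothetical type)."""
    question: str
    category: str
    response: str = ""
    evidence: list[str] = field(default_factory=list)  # e.g. document names


def find_gaps(answers: list[Answer]) -> dict[str, list[str]]:
    """Report uncovered categories, unanswered questions, and unsupported claims."""
    covered = {a.category for a in answers}
    gaps: dict[str, list[str]] = {
        "uncovered_categories": [c for c in REQUIRED_CATEGORIES if c not in covered],
        "unanswered": [],
        "no_evidence": [],
    }
    for a in answers:
        if not a.response.strip():
            gaps["unanswered"].append(a.question)
        elif not a.evidence:
            # The vendor answered, but gave nothing to back the claim up.
            gaps["no_evidence"].append(a.question)
    return gaps


answers = [
    Answer("Which model powers the feature?", "model_type",
           "Hosted LLM", ["architecture-overview.pdf"]),
    Answer("Is customer data used for training?", "training", "No"),
    Answer("How long are prompts retained?", "data_lifecycle"),
]
print(find_gaps(answers))
```

Separating "answered" from "answered with evidence" is the point of the sketch: a bare "No" on training use is a claim to follow up on, not a finding.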

Aligning AI Oversight with Vendor Management

Vendor AI Assessment is a natural evolution of our broader vision for vendor oversight. Last year, we introduced a new Vendor Management capability to help customers monitor third-party compliance, automate risk assessments, and track data flows across their vendor ecosystem.

Now, we’re extending that oversight to include AI. As more vendors incorporate generative AI into their products, it’s no longer enough to just assess contracts and certifications. You need to know how their AI systems behave – and what they do with your data.

By embedding AI oversight into vendor governance, we’re helping organizations adapt to a new era of risk: one where AI is everywhere, and where blind trust is no longer an option.

Looking Ahead

AI adoption isn’t slowing down, and neither is the complexity that comes with it. Regulations are catching up, and internal stakeholders, especially privacy, compliance, and AI governance teams, are demanding answers.

It’s time to extend AI oversight beyond the walls of your organization. With Vendor AI Assessment, you can assess vendor AI usage at scale, reduce exposure, and ensure that third-party innovation doesn’t come at the cost of your data security or compliance posture.

Want to learn more? Schedule a 1:1 with one of our AI and data experts today!