Training Data Risk

-

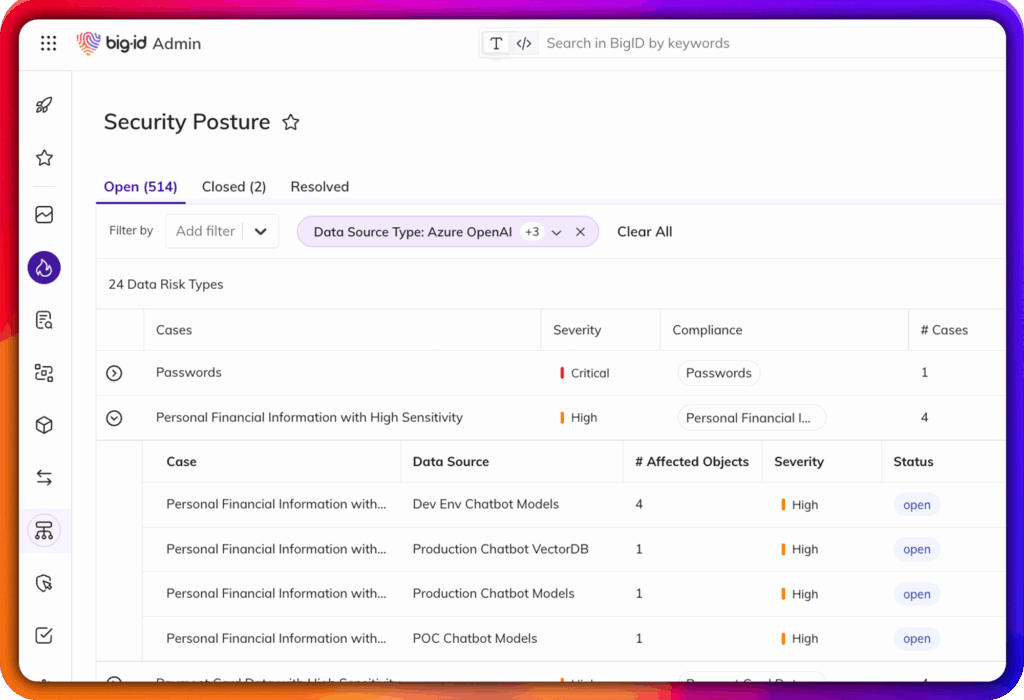

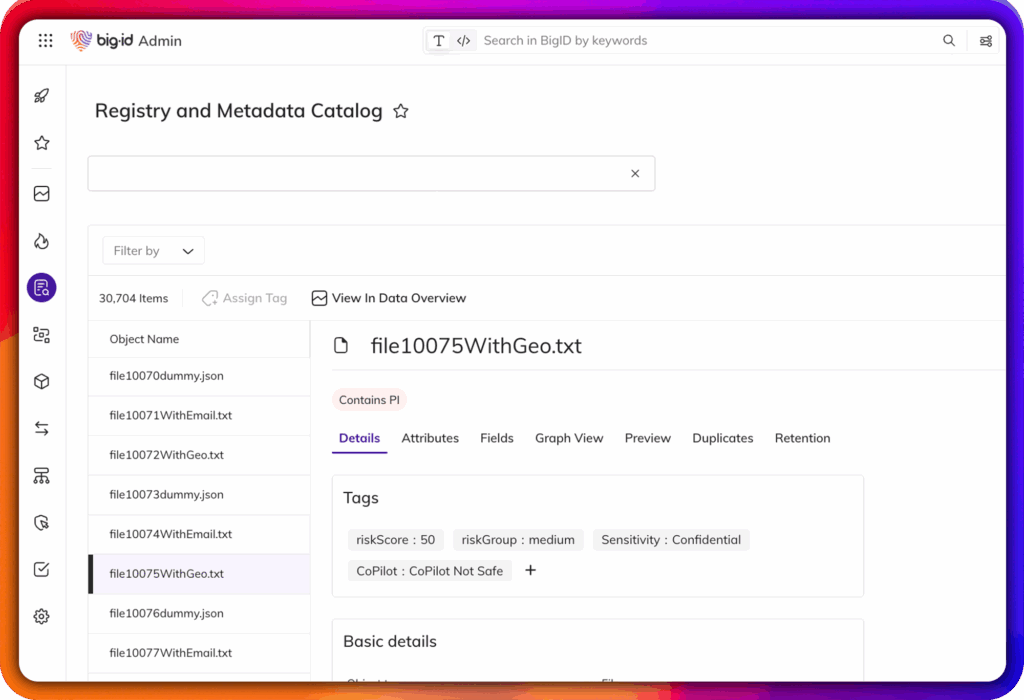

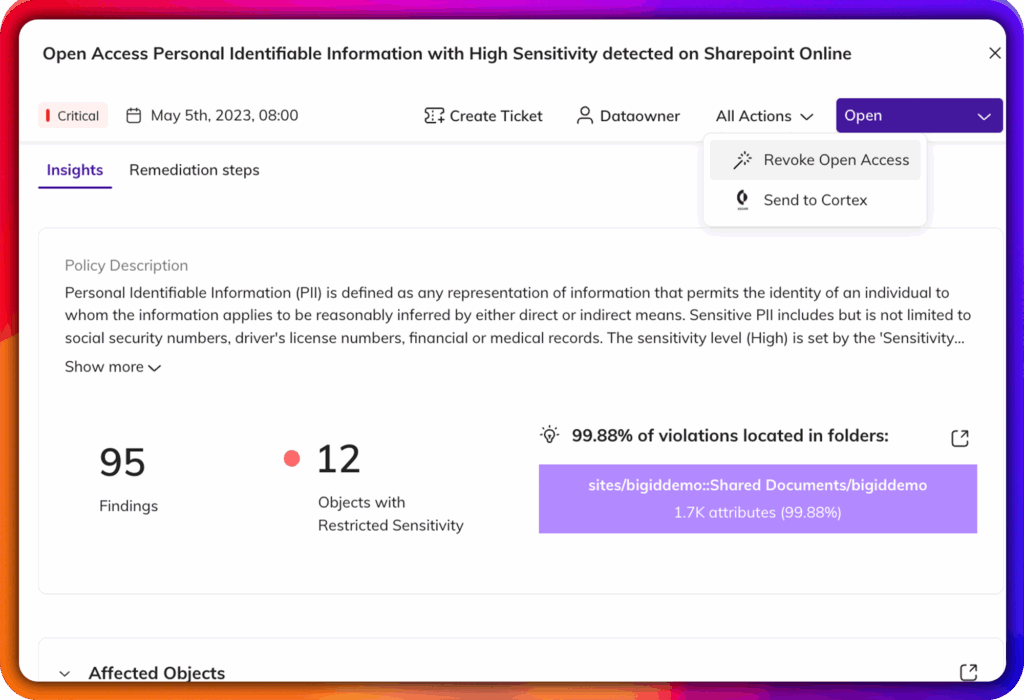

Identifizierung von PII, PHI, Berechtigungsnachweisen und IP in Schulungsdatensätzen

-

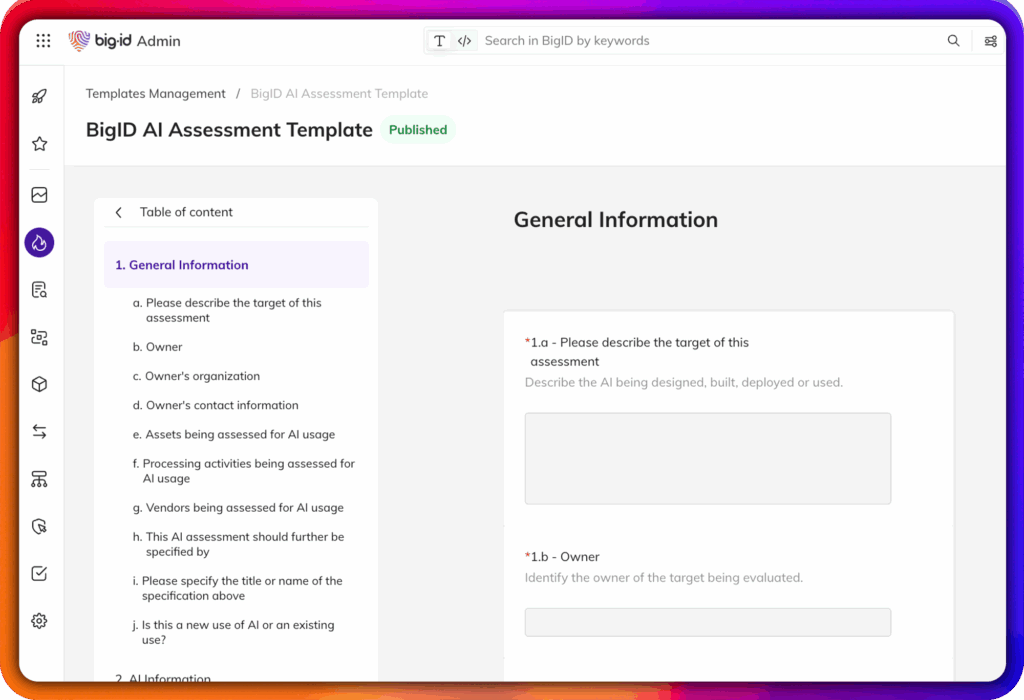

Oberflächenverzerrung, Drift und Verstöße gegen Vorschriften, bevor sie in das Modell einfließen

- Map lineage from raw data to model outputs to support explainability