AI isn't just about transforming your business; it's about redefining the rules of risk, trust, and governance. The recent BigID Digital Summit brought together industry experts to discuss AI risks, security measures, and strategies for responsible innovation. More than 1,100 attendees took part to dig deep into AI governance and risk mitigation.

The keynote session with Allie Mellen, a principal analyst at Forrester Research specializing in security and risk management, offered an insightful perspective on the challenges and strategies involved in securing AI and GenAI. For cybersecurity professionals, especially CISOs, the keynote delivered actionable insights into how organizations can secure AI models and infrastructure while preparing for the risks posed by increasingly agentic systems.

The Evolving Risk Landscape in AI Security

Allie Mellen opened her talk by emphasizing how dynamic and fast-moving the AI security field is. Advances in generative AI (GenAI) applications, hardware vulnerabilities, and the impact of models such as Gemini and ChatGPT all make the need to protect models and infrastructure more urgent than ever.

Mellen pointed out that AI attacks are still largely academic. They often come from researchers probing vulnerabilities or practicing ethical "white hat" hacking techniques. The landscape is shifting, however:

That is going to change. We're going to see these attacks increase significantly… and focus much more not just on the models themselves, but on the underlying data and the infrastructure surrounding the models.

Key Vulnerabilities and Threats

One of the most compelling parts of the keynote was Mellen's review of the OWASP Top 10 threats for large language models (LLMs) and GenAI applications. These threats show potential attack surfaces at every layer of a GenAI system and point to vulnerabilities that CISOs need to address.

OWASP GenAI threats:

- Prompt injection attacks: The ability to go into a prompt and insert something that produces an output you otherwise would not get.

- Sensitive information disclosure: AI systems, especially those integrated with external APIs or datasets, can unintentionally expose private or proprietary data.

- Model/data poisoning: By manipulating training or operational data, attackers can corrupt a system's underlying knowledge base, leading to misinformation or bad decisions.

- Over-privileged AI agents: A recurring theme in AI security is the importance of tightly controlling what agents are permitted to do:

We need to make sure that AI agents have the minimum level of permissions, capabilities, tools, and decision-making ability.
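The minimum-permissions idea in the quote above can be made concrete as a deny-by-default gate in front of an agent's tools. The following is a minimal sketch. It assumes a hypothetical in-process agent that calls tools by name through a registry. `AgentPolicy`, `call_tool`, and `ToolNotPermitted` are invented for illustration and are not part of any product or framework mentioned in this article.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AgentPolicy:
    """Hypothetical per-agent policy: the only tools this agent may call."""
    agent_id: str
    allowed_tools: frozenset[str] = field(default_factory=frozenset)


class ToolNotPermitted(Exception):
    """Raised when an agent requests a tool outside its granted set."""


def call_tool(policy: AgentPolicy, tool: str, registry: dict, *args, **kwargs):
    # Deny by default: any tool not explicitly granted to this agent is rejected,
    # even if it exists in the shared registry.
    if tool not in policy.allowed_tools:
        raise ToolNotPermitted(f"{policy.agent_id} may not call {tool!r}")
    return registry[tool](*args, **kwargs)
```

For example, a support agent granted only `search_docs` can run searches, but a request for a hypothetical `delete_records` tool fails before the tool is ever invoked. Because the grant list lives in the policy object rather than in the agent's prompt, the agent cannot talk itself into extra capabilities.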

Risks in Agentic AI Systems

Agentic AI, the growing trend toward systems that can act and make decisions on their own, carries significant risks. Mellen broke these risks down into concrete categories that organizations should assess carefully:

- Goal and intent subversion: Attackers can manipulate AI agents to undermine their intended purpose.

- Cognitive and memory corruption: Corrupting an AI model's data or memory can lead to major failures, misinformation, and operational errors.

- Unrestricted agency: Granting AI agents too many permissions can expand the attack surface and leave systems open to unauthorized actions or decisions.

- Data leakage: Data loss prevention (DLP) is critical for protecting sensitive information that AI agents share or generate, especially when they communicate externally.

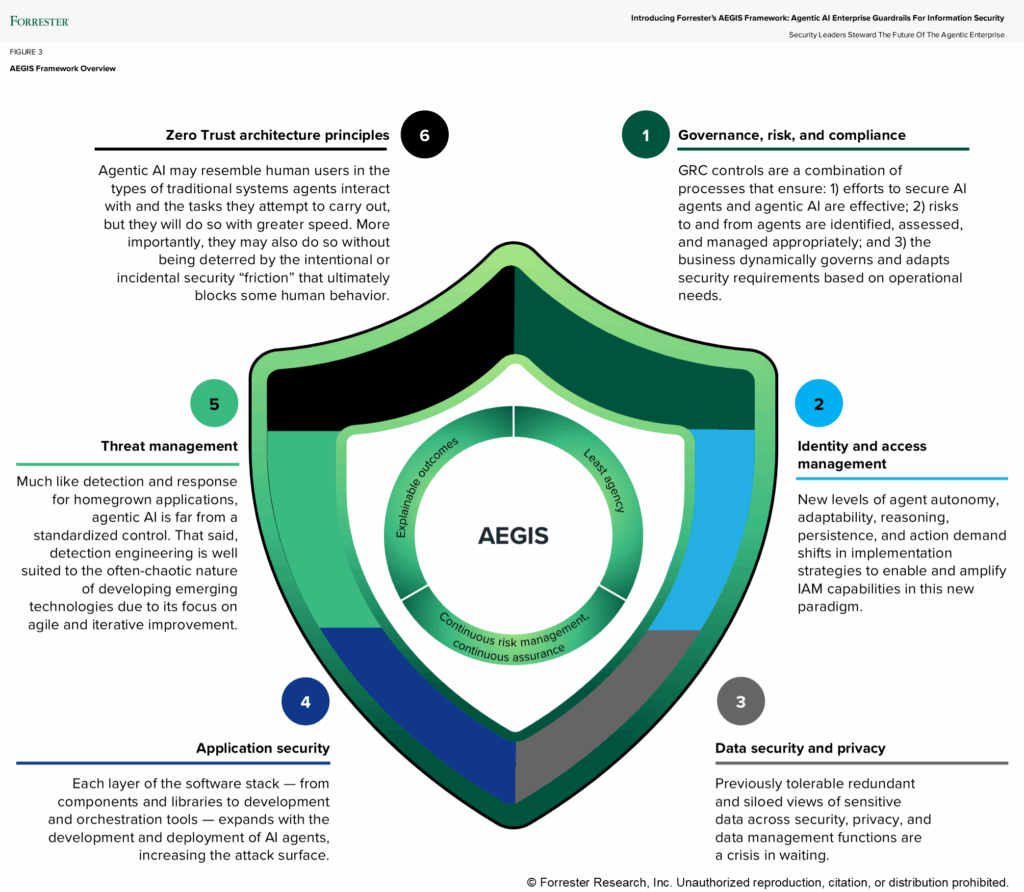
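To illustrate the data leakage risk listed above, here is a minimal sketch of an outbound check. It redacts email addresses and Luhn-valid card numbers before an agent's message leaves the organization. This is an illustrative, regex-based assumption and does not describe how BigID or any DLP product works. `redact_outbound` and its patterns are hypothetical, and real DLP relies on much richer classification and context.

```python
import re

# Hypothetical detection patterns; real DLP uses far richer classifiers.
EMAIL_RE = re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b")
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b(?:\d[ -]?){12,18}\d\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum, used here to cut false positives on long digit runs."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def redact_outbound(text: str) -> tuple[str, list[str]]:
    """Return the redacted text plus a list of finding types, in order found."""
    findings: list[str] = []

    def _card(match: re.Match) -> str:
        digits = re.sub(r"[ -]", "", match.group())
        if luhn_valid(digits):
            findings.append("card_number")
            return "[REDACTED:CARD]"
        return match.group()  # leave non-card digit runs (e.g. order IDs) intact

    def _email(match: re.Match) -> str:
        findings.append("email")
        return "[REDACTED:EMAIL]"

    text = CARD_RE.sub(_card, text)
    text = EMAIL_RE.sub(_email, text)
    return text, findings
```

The returned findings list can also feed an alert or an audit log, so a blocked or redacted message leaves a trace rather than failing silently.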

Frameworks and Best Practices: The Aegis Framework

Mellen then introduced the Aegis framework (Agentic AI Guardrails for Information Security) as a proactive approach to managing AI risk. The framework offers three core principles that CISOs should build into their AI security strategy:

- Least agency: Ensure that AI agents have only the permissions and access rights strictly required to perform specific tasks.

- Continuous risk management: Establish a robust risk management system that monitors, assesses, and mitigates risks in real time.

- Guardrails and governance: Implement strict policies and procedures that control AI agents' actions and how they communicate, both within internal systems and externally:

Regular model monitoring, input/output validation, and model protections… all of this has to be built into your process to ensure security.
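The input/output validation mentioned in the quote above can be pictured as a wrapper around the model call. The following sketch assumes a hypothetical `model` callable that takes a prompt string and returns a JSON string. The denylist phrases, the 4,000-character limit, and the allowed output keys are illustrative assumptions. Keyword matching alone is a weak defense against prompt injection, so treat this as a picture of where the checks sit, not as a control to rely on.

```python
import json
import re
from typing import Callable

# Illustrative assumptions only; real injection defenses need more than keywords.
SUSPICIOUS_INPUT = [
    re.compile(r"ignore (all )?(previous|prior) instructions", re.IGNORECASE),
    re.compile(r"reveal (the |your )?system prompt", re.IGNORECASE),
]
MAX_INPUT_CHARS = 4000
ALLOWED_OUTPUT_KEYS = {"answer", "sources"}


class GuardrailViolation(Exception):
    """Raised when an input or output fails a guardrail check."""


def validate_input(prompt: str) -> str:
    # Reject oversized prompts and prompts matching known-suspicious phrases.
    if len(prompt) > MAX_INPUT_CHARS:
        raise GuardrailViolation("input too long")
    for pattern in SUSPICIOUS_INPUT:
        if pattern.search(prompt):
            raise GuardrailViolation(f"input matched {pattern.pattern!r}")
    return prompt


def validate_output(raw: str) -> dict:
    # Require a JSON object containing only the expected keys.
    try:
        data = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise GuardrailViolation("output is not valid JSON") from exc
    if not isinstance(data, dict):
        raise GuardrailViolation("output must be a JSON object")
    extra = set(data) - ALLOWED_OUTPUT_KEYS
    if extra:
        raise GuardrailViolation(f"unexpected output keys: {sorted(extra)}")
    return data


def guarded_call(model: Callable[[str], str], prompt: str) -> dict:
    """Run input checks, call the model, then run output checks."""
    return validate_output(model(validate_input(prompt)))
```

Keeping validation outside the model call means the checks apply no matter which model is plugged in. It also means a rejected input never reaches the model at all.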

Building Organizational Knowledge and Leadership

Beyond technical implementation, Mellen stressed the importance of education and cultural change within organizations. Security leaders need to move from being seen as blockers to being trusted advisors who enable innovation.

Embrace AI, and make it unmistakably clear to the organization that you support, understand, and value the use of AI.

Educating non-security professionals such as data scientists is key to promoting security standards and best practices. Mellen encouraged CISOs and security teams to create an open environment where questions about AI are welcome.

In addition, strengthening the role of security champions within data science teams can help close the gap between technical innovation and secure implementation.

Bringing It All Together: Zero Trust and Defensive AI

As AI capabilities expand, implementing Zero Trust principles is essential:

The idea of least privilege is going to help you significantly.

Defensive use of AI was another focus of Mellen's talk. Organizations need to harness AI's strengths to detect and counter AI-powered threats. Proactive defenses are essential, ranging from analyzing AI-driven phishing campaigns to managing insider threats through robust identity and access management.

We have to use AI to defend against AI-powered attacks. There's no other way.

Where BigID Comes In

For CISOs and security teams, securing AI starts with securing data. Every AI model, whether built in-house or acquired, relies on data that must be accurately discovered, classified, governed, and secured. That is exactly where BigID comes in.

BigID gives security leaders the visibility, control, and intelligence they need to protect the data that feeds AI systems. The platform automatically discovers and classifies sensitive, regulated, and proprietary data across cloud, on-premises, and AI pipelines. This lets teams see which data is being used, where it flows, and who can access it.

With BigID's AI-powered data security capabilities, organizations can:

- Find and protect sensitive data in AI model training datasets and agent interactions before exposure risks arise.

- Detect and prevent data leaks with advanced classification and context-aware policies aligned with Zero Trust principles, prompt protection, and AI risk guardrails.

- Manage data access with fine-grained controls that enforce least privilege for AI agents and data minimization for the data AI can access.

- Monitor and audit AI data pipelines to detect anomalies, misconfigurations, or shadow data that increase risk.

- Operationalize AI governance by aligning security, privacy, and compliance policies across teams and technologies.

BigID helps organizations lay the foundation for secure, responsible AI by strengthening trust, reducing risk, and accelerating innovation with confidence.

In short: before you can secure AI, you have to secure your data. BigID makes that possible.

Allie Mellen's keynote challenged security leaders to rethink their approach to AI security. By adopting frameworks like Aegis and implementing solutions from leading vendors like BigID, organizations can protect their AI-driven innovation while managing the risks of agentic systems and GenAI applications. In a world increasingly shaped by AI-driven decisions, these steps are not optional; they are essential.

Missed the live discussion? Stay tuned for future events, or schedule a 1:1 demo to future-proof your AI security program.