The AI era has unleashed powerful innovation, along with new privacy blind spots. As models ingest enormous volumes of data and become embedded in everyday customer interactions, organizations face an urgent challenge: preventing personal data from being exposed, stored, or misused by AI systems. Privacy, legal, and data leaders are now expected to protect sensitive data across dynamic, complex AI pipelines without blocking innovation.

The problem: privacy risks have outpaced traditional controls

Traditional privacy programs were built for static environments: structured databases, known data flows, and predictable access. AI systems do not follow those rules. They draw on varied data types, are often hard to trace, and can inadvertently reveal confidential information through inference.

Key risk areas include:

- Training on datasets without proper consent or classification

- Generative models unintentionally leaking PII in their outputs

- Shadow AI and decentralized experimentation that introduce blind spots

- Rising global regulatory pressure (EU AI Act, CPRA, GDPR guidance on AI)

What has changed: AI models are no longer just software. They are data-driven systems that require continuous privacy governance and monitoring.

A strategic framework for AI privacy

To build trustworthy, compliant AI systems, privacy programs have to evolve. Here is a foundational framework to guide that shift:

1. Inventory AI across the organization

- Identify where AI is being developed, deployed, or experimented with

- Catalog the datasets used for training, fine-tuning, and inference
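
As a rough illustration of what such an inventory could look like in practice, the sketch below models AI systems and the datasets they use, then lists datasets that have not yet been classified. The class names, fields, and sample entries are hypothetical and chosen only for this example; a real inventory would come from discovery tooling rather than a hand-written list.

```python
from dataclasses import dataclass, field


@dataclass
class DatasetRecord:
    name: str
    stage: str  # "training", "fine-tuning", or "inference"
    classified: bool = False  # has the dataset been scanned and labeled?


@dataclass
class AISystem:
    name: str
    owner: str
    status: str  # "development", "deployed", or "experiment"
    datasets: list[DatasetRecord] = field(default_factory=list)


def unclassified_datasets(inventory):
    """Return (system name, dataset name) pairs that still lack classification."""
    return [
        (system.name, ds.name)
        for system in inventory
        for ds in system.datasets
        if not ds.classified
    ]


# Hypothetical sample inventory, for illustration only.
inventory = [
    AISystem("support-chatbot", "cx-team", "deployed", [
        DatasetRecord("chat-transcripts", "fine-tuning"),
        DatasetRecord("faq-articles", "inference", classified=True),
    ]),
    AISystem("churn-model", "data-science", "experiment", [
        DatasetRecord("crm-export", "training"),
    ]),
]

gaps = unclassified_datasets(inventory)
print(gaps)
```

Keeping the lifecycle stage on each dataset record makes it possible to review training, fine-tuning, and inference data separately.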

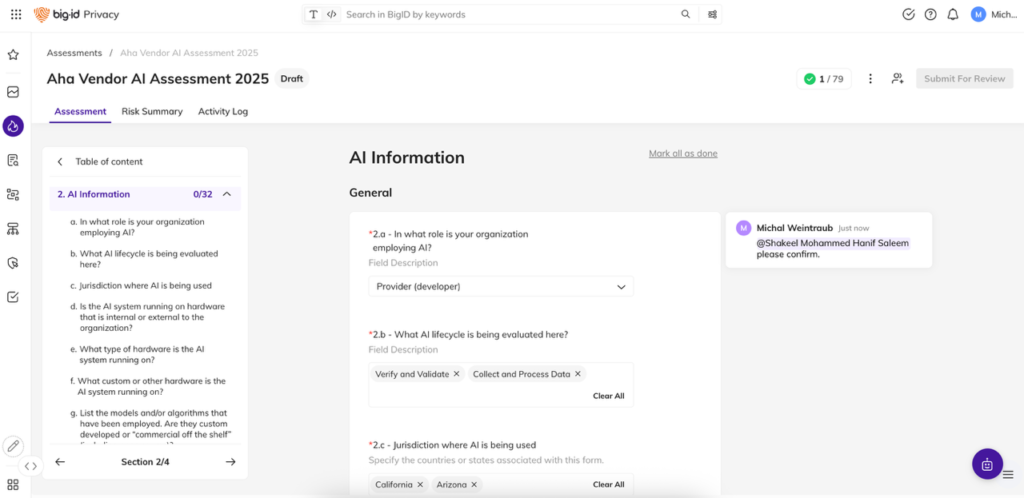

2. Build privacy reviews into the AI lifecycle

- Treat AI like a third-party vendor or a high-risk initiative

- Introduce privacy risk assessments for datasets and models early

3. Classify and monitor AI-related data

- Use automated tools to flag and label PII, PHI, and other regulated data

- Monitor continuously for data drift, exposure, or policy violations
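
To make the idea of automated flagging concrete, here is a minimal sketch that labels text records containing email addresses or US Social Security number patterns. It assumes simple regular expressions are an acceptable stand-in for a real classifier; the pattern set, function names, and sample records are hypothetical. Production classification typically combines many more detectors with validation and context checks.

```python
import re

# Deliberately simple patterns, assumed for illustration; they will miss
# many real-world formats and can produce false positives.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def label_record(text):
    """Return the sorted list of PII labels whose pattern matches the text."""
    return sorted(label for label, rx in PATTERNS.items() if rx.search(text))


def scan(records):
    """Map record index to labels, for records that match any pattern."""
    findings = {}
    for i, text in enumerate(records):
        labels = label_record(text)
        if labels:
            findings[i] = labels
    return findings


# Hypothetical sample records.
sample = [
    "Order 1182 shipped on time.",
    "Contact jane.doe@example.com about the refund.",
    "SSN on file: 123-45-6789, email bob@example.org",
]

findings = scan(sample)
print(findings)
```

Running the same scan on a schedule, or on model outputs as well as inputs, is one simple way to approximate the continuous monitoring described above.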

4. Establish and enforce data controls

- Define rules for usage, minimization, retention, and residency

- Apply policy-based governance across the training and inference phases
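
The four rule types above can be expressed as a small policy check that runs before a dataset is admitted to training or inference. The sketch below is one possible shape under stated assumptions: the `Policy` and `Dataset` classes, their fields, and the sample values are hypothetical and do not describe any particular product's API.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class Policy:
    allowed_purposes: frozenset  # usage rule
    blocked_fields: frozenset    # minimization rule
    max_age_days: int            # retention rule
    allowed_regions: frozenset   # residency rule


@dataclass
class Dataset:
    name: str
    purpose: str
    region: str
    collected_on: date
    fields: set


def violations(ds, policy, today):
    """Return the names of the rules that the dataset breaks."""
    found = []
    if ds.purpose not in policy.allowed_purposes:
        found.append("usage")
    if ds.fields & policy.blocked_fields:
        found.append("minimization")
    if today - ds.collected_on > timedelta(days=policy.max_age_days):
        found.append("retention")
    if ds.region not in policy.allowed_regions:
        found.append("residency")
    return found


# Hypothetical policy and dataset, for illustration only.
policy = Policy(
    allowed_purposes=frozenset({"training", "inference"}),
    blocked_fields=frozenset({"email", "ssn"}),
    max_age_days=365,
    allowed_regions=frozenset({"eu"}),
)
tickets = Dataset("support-tickets", "training", "us",
                  date(2023, 6, 1), {"email", "ticket_text"})

result = violations(tickets, policy, today=date(2025, 1, 1))
print(result)
```

Passing `today` in explicitly keeps the check deterministic, which makes it easier to test and to replay during an audit.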

5. Build cross-functional alignment

- Create shared accountability across data science, legal, security, and privacy

- Train teams on AI privacy risks and best practices

BigID enables privacy, legal, and security teams to operationalize AI privacy at scale.

The right strategy sets the vision, but executing it at scale takes the right platform. BigID helps organizations operationalize privacy in AI pipelines and makes governance enforceable, discoverable, and auditable.

BigID helps you:

- Discover sensitive data in AI pipelines: Automatically scan structured and unstructured data used in training, tuning, and inference, including files, text, logs, and APIs.

- Classify personal and regulated data precisely: Identify PII, PHI, financial information, and other sensitive attributes with AI-powered classification models trained on real-world privacy outcomes.

- Enforce data minimization and retention policies: Define and automate controls so that only the right data is used and it is kept only as long as necessary.

- Govern shadow AI and unauthorized models: Uncover unsanctioned AI activity, data misuse, and blind spots before they lead to compliance violations or brand damage.

- Enable "privacy by design" across teams: Give legal and privacy teams continuous visibility into where data is used, how it is governed, and which policies apply, without slowing innovation.

The result: You reduce risk, enable compliant AI at scale, and strengthen consumer trust by protecting personal data from the start.

Privacy cannot be an afterthought in AI. BigID makes it a built-in advantage.

Book a demo to see how BigID helps protect personal data in your AI systems and enables compliant, trustworthy innovation at scale.