AI Asset Inventory

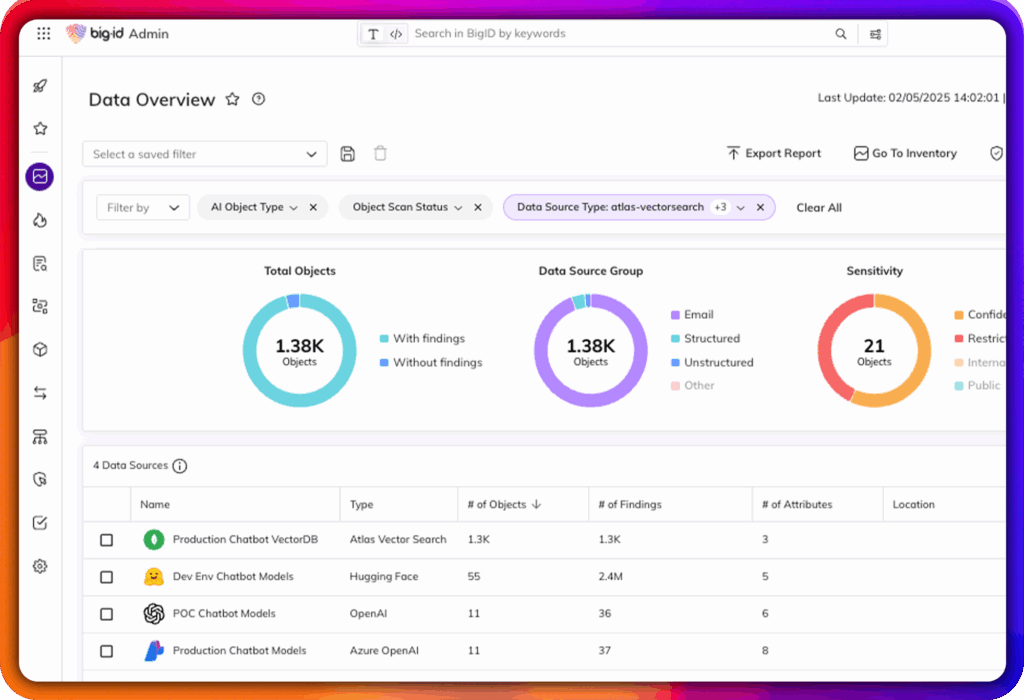

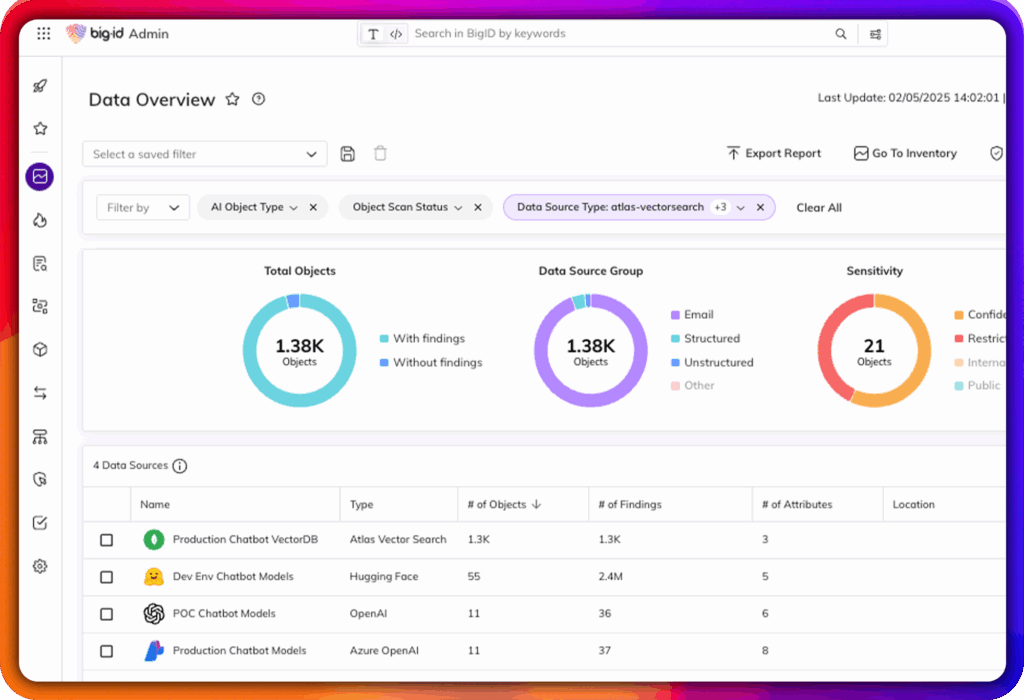

Automatically discover and catalog your AI models, training sets, datasets, copilots, and AI-powered applications across cloud, SaaS, and on-premises environments.

Automatically discover the AI models, copilots, and third-party tools in use, whether sanctioned or not

Maintain a central, continuously updated inventory of AI systems and their associated data

Track where models are deployed, which data they use, and how they are applied
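To make the inventory idea concrete, here is a minimal sketch of a central catalog that records where each AI asset runs, which datasets it uses, and whether it is sanctioned. It is an illustration only, not BigID's data model or API; the names `AIAsset`, `AIInventory`, and all sample entries are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class AIAsset:
    """One inventory entry (hypothetical schema, for illustration only)."""
    name: str
    kind: str          # e.g. "model", "copilot", "third-party tool"
    environment: str   # e.g. "cloud", "saas", "on-prem"
    sanctioned: bool   # True if the asset has been approved for use
    datasets: List[str] = field(default_factory=list)


class AIInventory:
    """A central catalog keyed by asset name."""

    def __init__(self) -> None:
        self._assets: Dict[str, AIAsset] = {}

    def upsert(self, asset: AIAsset) -> None:
        # Re-registering an asset overwrites the previous record,
        # which keeps the inventory current as discovery re-runs.
        self._assets[asset.name] = asset

    def unsanctioned(self) -> List[str]:
        # Assets in use that nobody approved ("shadow AI").
        return sorted(a.name for a in self._assets.values() if not a.sanctioned)

    def assets_using(self, dataset: str) -> List[str]:
        # Which assets consume a given dataset.
        return sorted(a.name for a in self._assets.values() if dataset in a.datasets)


# Sample data (invented for this sketch).
inventory = AIInventory()
inventory.upsert(AIAsset("churn-model", "model", "cloud", True, ["crm_exports"]))
inventory.upsert(AIAsset("notes-copilot", "copilot", "saas", False, ["crm_exports", "hr_docs"]))

shadow_ai = inventory.unsanctioned()
crm_consumers = inventory.assets_using("crm_exports")
```

Keying the catalog by asset name is a simplification; a real inventory would need a stable identifier that survives renames and redeployments.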

Define and enforce role-based access to training data, prompts, outputs, and models

Identify and contain over-permissioned users and shadow AI usage
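The two access controls above can be sketched in a few lines: a role-to-permission map that is checked before access is granted, and a comparison of what a user actually holds against what their role allows. This is a simplified illustration under assumed names; the roles, the permission strings, and the functions `is_allowed` and `excess_permissions` are hypothetical and do not describe BigID's implementation.

```python
from typing import Dict, Set

# Hypothetical role definitions: each role maps to the permissions it needs.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "data_scientist": {"training_data:read", "model:read", "prompt:write"},
    "auditor": {"output:read", "model:read"},
}


def is_allowed(role: str, permission: str) -> bool:
    """Role-based check: unknown roles get no permissions (deny by default)."""
    return permission in ROLE_PERMISSIONS.get(role, set())


def excess_permissions(role: str, granted: Set[str]) -> Set[str]:
    """Permissions a user holds beyond what their role allows."""
    return granted - ROLE_PERMISSIONS.get(role, set())


# Sample user grants (invented for this sketch).
auditor_grants = {"output:read", "model:read", "training_data:write"}
over_permissioned = excess_permissions("auditor", auditor_grants)
```

Any non-empty result from `excess_permissions` marks a user to review; revoking those grants brings the account back to least privilege.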

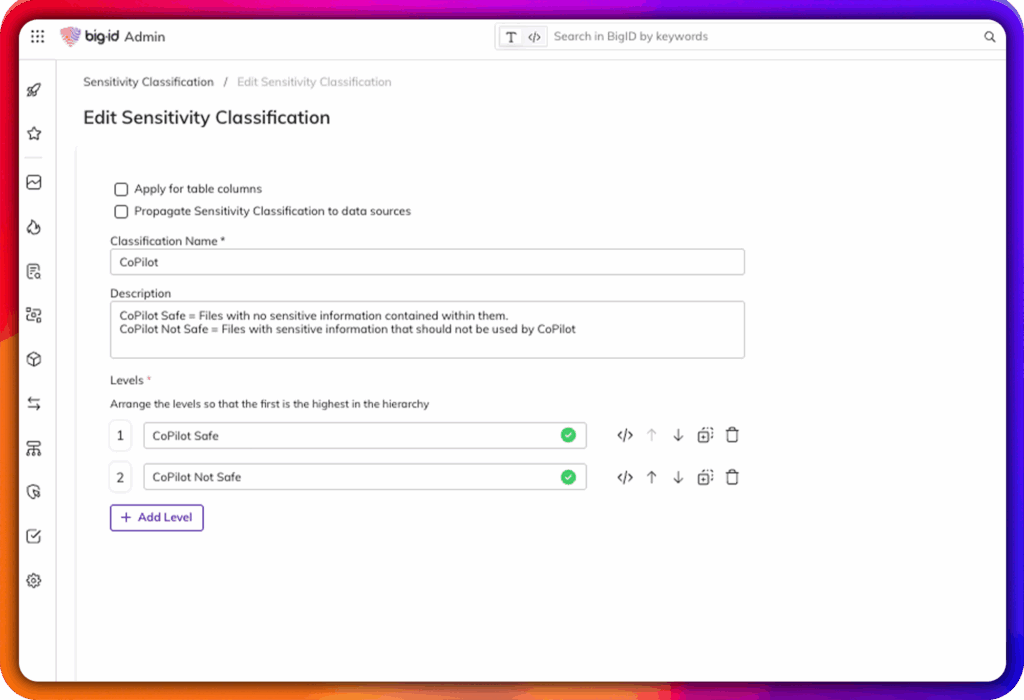

Restrict AI access to approved, compliant, and purpose-limited datasets

Apply governance controls from data ingestion through model deployment and use

Monitor for AI-specific risks: bias, data leakage, policy violations, and unauthorized use

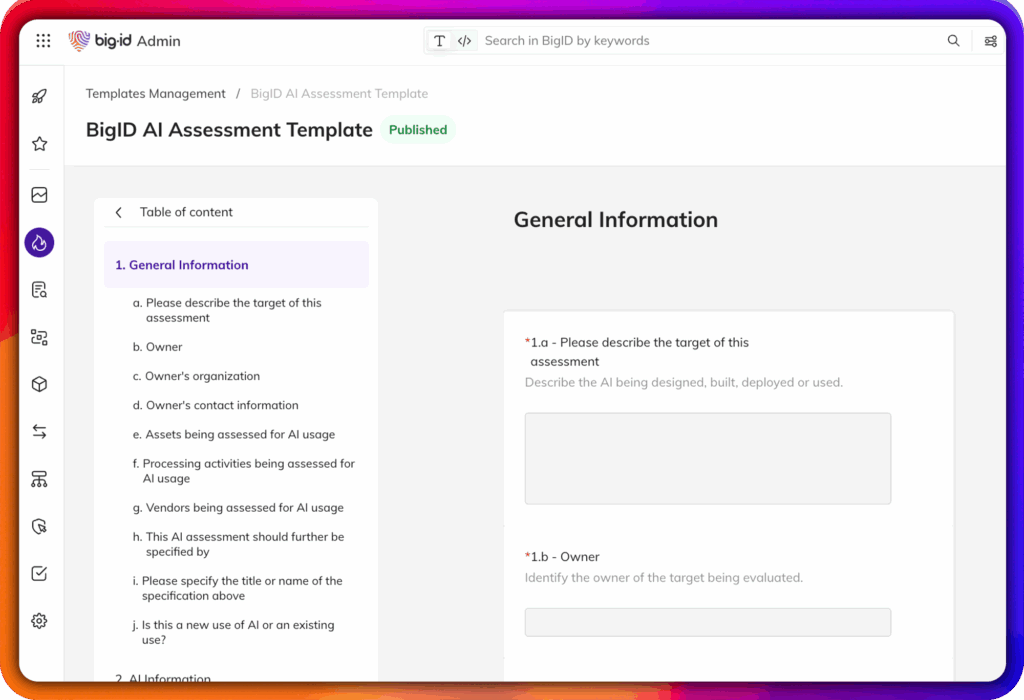

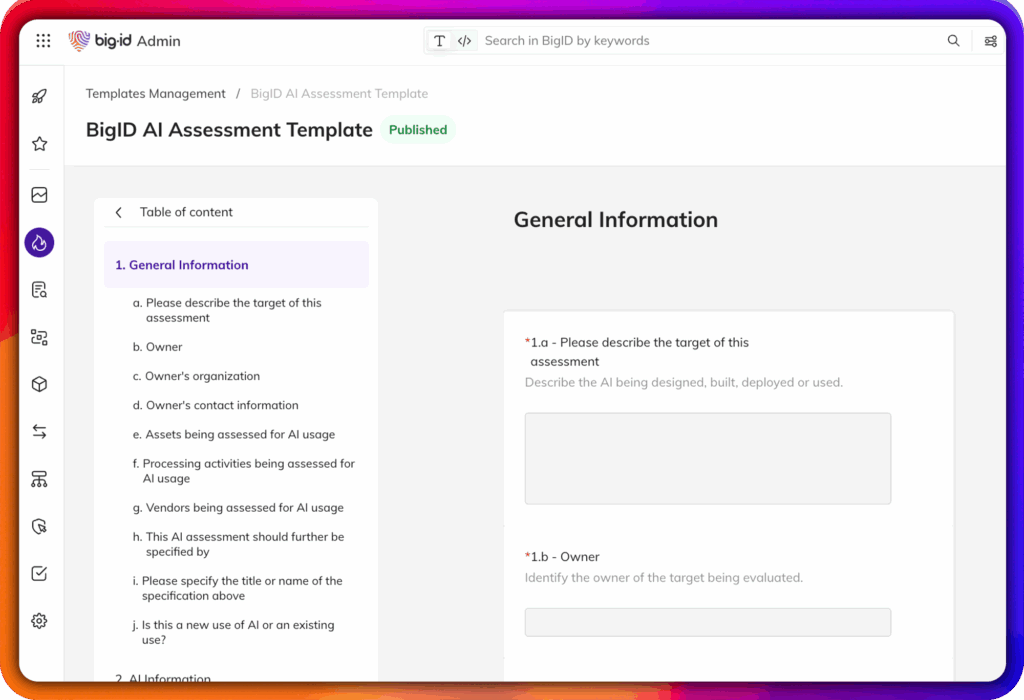

Automate compliance with global frameworks such as the NIST AI RMF, the EU AI Act, and ISO/IEC 42001

BigID operationalizes AI trust, risk, and security management with real capabilities, not vaporware:

Automatically discover and catalog your AI models, training sets, datasets, copilots, and AI-powered applications across cloud, SaaS, and on-premises environments.

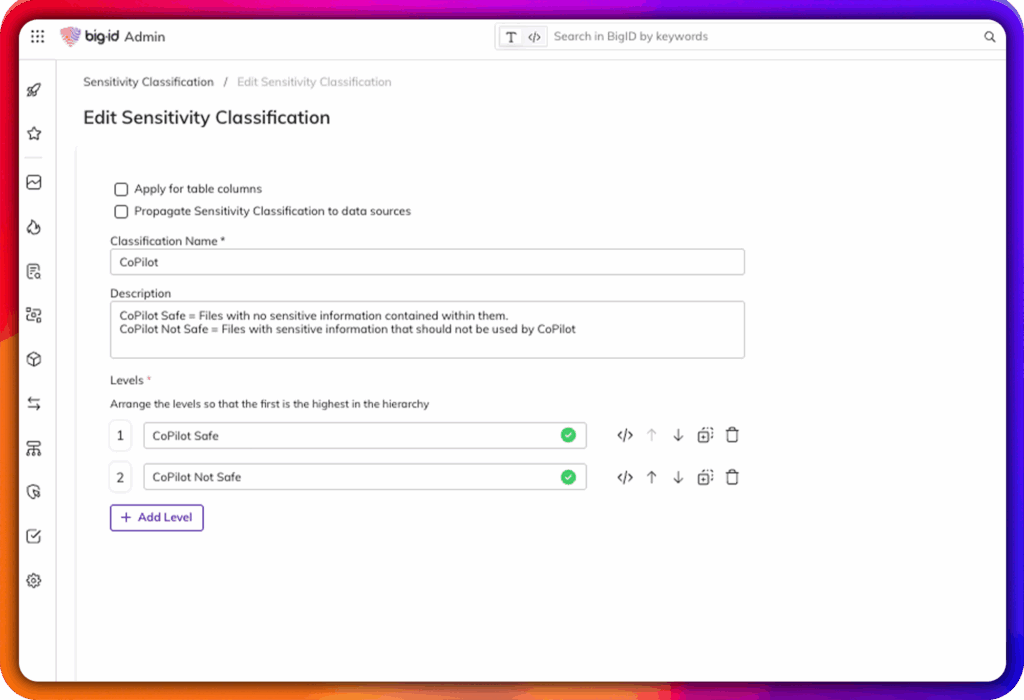

Identify and classify the sensitive, regulated, or critical data that feeds AI models, supporting responsible AI from the start.

Control and monitor who may access models, training datasets, and AI pipelines. Enforce least privilege and zero trust across AI environments.

Uncover unauthorized AI activity, rogue copilots, and hidden model deployments that could introduce risk or violate policy.

Enforce AI-specific policies for privacy, data residency, security, and regulatory compliance, including emerging frameworks such as the EU AI Act, NIST AI RMF, and ISO/IEC 42001.

Detect vulnerabilities such as overexposed training data, insecure access, or bias-prone datasets, and take action to remediate issues before they escalate.

Track the origin, evolution, and use of training data and models, supporting transparent, explainable AI practices for audits, compliance, and governance.

Alert on access violations, shadow AI risks, policy violations, and emerging threats to your AI environments, and take action in response.

Enable trustworthy, responsible, and resilient AI innovation with AI TRiSM from BigID.