The most dangerous AI security failures of 2026 won't look like breaches. They'll look like business as usual: models trained on sensitive data they should never have seen, non-human identities that are quietly over-privileged, and agentic AI acting unchecked on data without governance, context, or constraints.

As enterprises deploy AI at scale, the risk is shifting from data loss to data misuse. The sensitive data used to train and feed AI (customer records, intellectual property, financial data) can now be accessed, recombined, and manipulated faster than humans can intervene. Add unmanaged non-human identities and an AI Security Posture Management (AISPM) practice that is still maturing, and the impact becomes systemic.

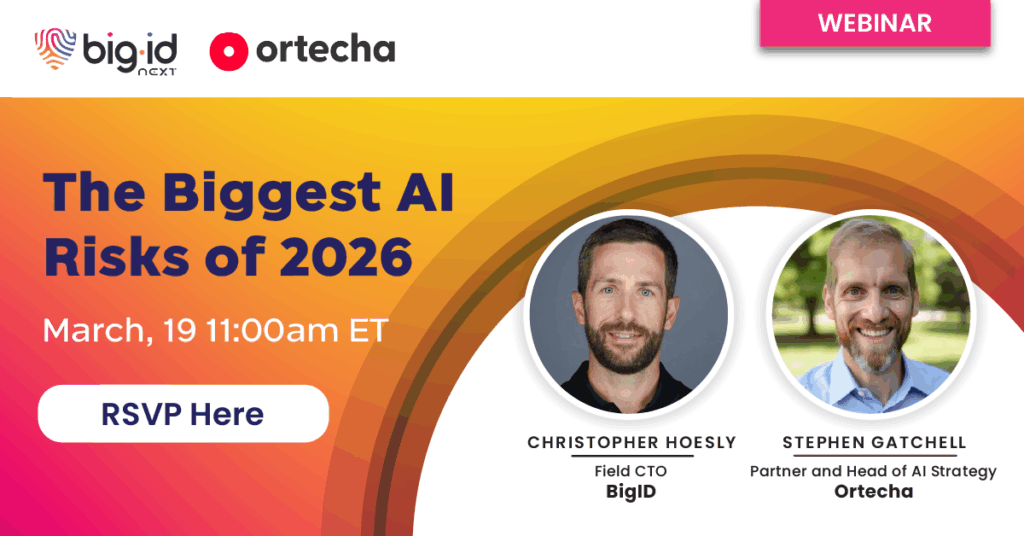

In this webinar, we'll examine the biggest AI security risks that will shape 2026, including:

- How sensitive data used in AI training becomes a long-term liability

- Why non-human identity access is the fastest-growing and least-regulated area of risk

- Where AISPM fits (and where it falls short) in preventing real-world misuse

- How weak access controls and poor data hygiene turn AI into a risk multiplier

You'll leave with a practical framework for governing how AI systems use data, so security teams can move from reactive controls to proactive prevention of misuse.