Introduction: AI Innovation Depends on Trust

AI is moving quickly from experimentation to enterprise-scale deployment. Models are being trained, fine-tuned, and deployed faster than ever, often using large volumes of personal and sensitive data. But as AI systems grow more powerful, so does the responsibility to ensure that data use respects individual choices and ethical commitments.

For privacy leaders, this creates a new challenge: how do you ensure that user consent is honored not only in policy but in practice, across every AI system and workflow?

Today, we are announcing Consent for AI, a new BigID capability designed to help organizations operationalize consent across AI data use and maintain trust as AI adoption accelerates.

The Problem: Consent Ends Where AI Begins

Most organizations have established processes for collecting and managing consent preferences. But once personal data enters AI workflows, from training data to inference and downstream applications, consent signals often drop out of view.

Privacy teams are left facing critical questions:

- Has consent been revoked for data used in this model?

- Are opt-outs reflected consistently across all AI systems?

- Can we say with confidence that AI is not using personal data when users have opted out?

Without clear answers, organizations face blind spots that can undermine trust, responsible AI commitments, and the credibility of their AI initiatives.

Introducing Consent for AI

Consent for AI bridges the gap between consent management and AI data use.

By connecting consent intelligence directly to AI systems, data, and risk tracking, BigID helps privacy teams detect when consent is withdrawn and ensure that opt-outs are identified, tracked, and addressed across AI environments.

Instead of relying on manual reviews or static documentation, Consent for AI provides continuous visibility into how consent choices affect AI data use, so teams can act before issues turn into public, internal, or reputational risks.

How Consent for AI Works

Consent for AI extends BigID's Universal Consent platform with AI-aware enforcement and monitoring, allowing privacy teams to manage consent where AI risk actually occurs.

Key capabilities include:

- AI-aware consent enforcement to detect when personal data may still be used in AI systems after consent has been revoked

- Automated handling of AI consent revocations to eliminate manual workflows and inconsistencies

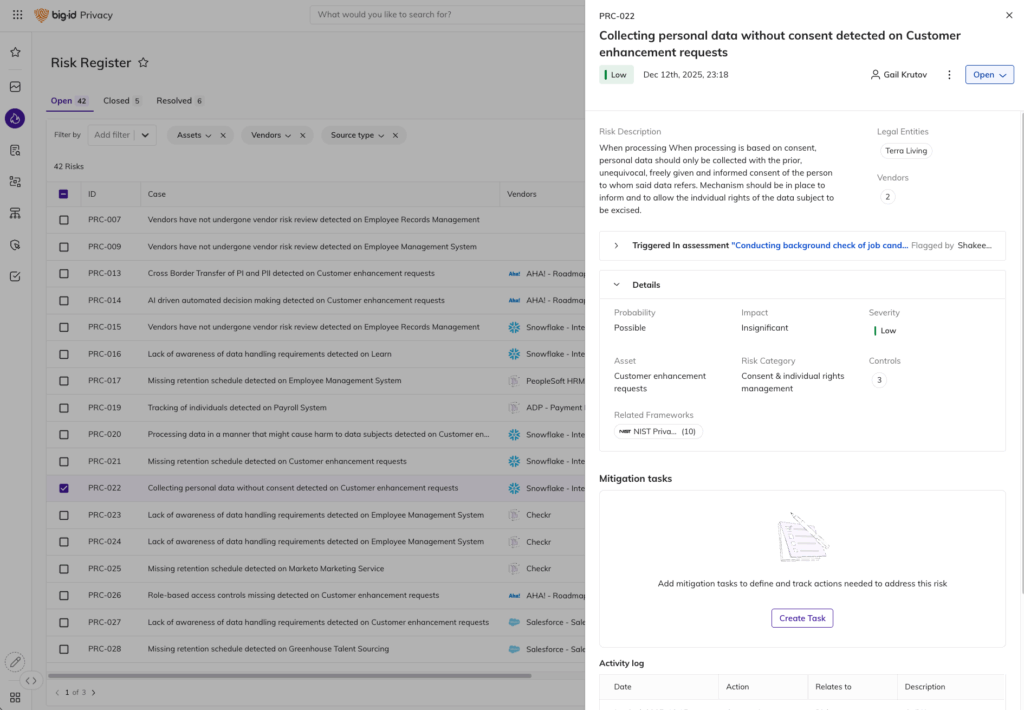

- Integrated consent and risk intelligence that flags unmanaged AI opt-outs as risks

- Continuous monitoring for unauthorized AI data use

- Reporting dashboards that give executives visibility into AI consent posture and remediation status
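To make the first capability concrete, here is a minimal Python sketch of the underlying idea: comparing consent revocation timestamps against records of AI data use. It is purely illustrative and does not represent BigID's API or implementation. The names `ConsentRecord`, `AIDataUse`, and `find_unmanaged_opt_outs`, along with the sample data, are all hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import Optional


# Hypothetical record types, invented for this illustration only.
@dataclass(frozen=True)
class ConsentRecord:
    subject_id: str
    revoked_at: Optional[datetime]  # None means consent is still active


@dataclass(frozen=True)
class AIDataUse:
    subject_id: str
    system: str        # name of the AI system that used the data
    used_at: datetime  # when the data was used (training or inference)


def find_unmanaged_opt_outs(consents, uses):
    """Return AI data uses that happened at or after a subject revoked consent."""
    revoked = {
        c.subject_id: c.revoked_at for c in consents if c.revoked_at is not None
    }
    return [
        u for u in uses
        if u.subject_id in revoked and u.used_at >= revoked[u.subject_id]
    ]


# Made-up sample data: alice opted out on 1 March 2024, bob has not opted out.
consents = [
    ConsentRecord("alice", datetime(2024, 3, 1)),
    ConsentRecord("bob", None),
]
uses = [
    AIDataUse("alice", "recsys-training", datetime(2024, 2, 15)),
    AIDataUse("alice", "chat-inference", datetime(2024, 3, 10)),
    AIDataUse("bob", "chat-inference", datetime(2024, 3, 10)),
]

flagged = find_unmanaged_opt_outs(consents, uses)
for use in flagged:
    print(use.subject_id, use.system)
```

In this sketch, only uses that occur after the revocation timestamp are flagged. A real deployment would also need to decide how to treat data that was already incorporated into a trained model before the opt-out, which is a policy question this example does not address.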

Why This Matters Now

As AI becomes embedded in core business operations, expectations for transparency and accountability are rising among customers, employees, partners, and boards. Even as regulatory approaches evolve, trust remains non-negotiable.

Consent for AI gives organizations a way to demonstrate responsible AI in practice, not just in theory. By ensuring that personal data is not used in AI systems when users have opted out, privacy teams can support innovation without sacrificing trust or control.

Built for Privacy Leaders

Consent for AI is built for Chief Privacy Officers and privacy teams who need more than documentation. It restores control, visibility, and confidence by making consent an operational part of AI governance rather than a disconnected record.

With Consent for AI, privacy teams can:

- Support AI initiatives with confidence

- Reduce hidden AI privacy risk

- Demonstrate ethical and transparent data use

- Strengthen trust with customers and stakeholders

Moving Forward with Responsible AI

AI will continue to evolve, and so will expectations around the use of personal data. Consent for AI helps organizations stay ahead by embedding consent into AI data use itself, so that responsible practices scale alongside innovation.

Visit Consent for AI to learn more, or schedule a 1:1 demo with our privacy and security experts.