Artificial intelligence (AI) is only as secure as the data it is trained on. Yet most organizations still rely on raw, unfiltered datasets full of sensitive, personal, or regulated information. Once that data is fed into a model, it can lead to prompt injection vulnerabilities, data leakage, unauthorized access, and serious compliance risks.

BigID is changing that.

We are excited to introduce Data Cleansing for AI, a powerful new capability that helps organizations remove high-risk content from AI datasets before it becomes a problem. With this release, BigID gives security and governance teams a way to automatically redact or tokenize sensitive data across structured and unstructured sources, helping teams build AI on cleaner, lower-risk data.

The challenge: AI pipelines are risk pipelines

Enterprises are rolling out GenAI across the organization, but most still lack controls over the data that feeds their models. This creates a blind spot: once sensitive data enters a prompt, a copilot, or a training set, it is almost impossible to contain.

Security teams need a way to cleanse that data before it reaches the model.

Until now, this has been done manually, inconsistently, and unreliably.

BigID's answer: cleanse data before AI uses it

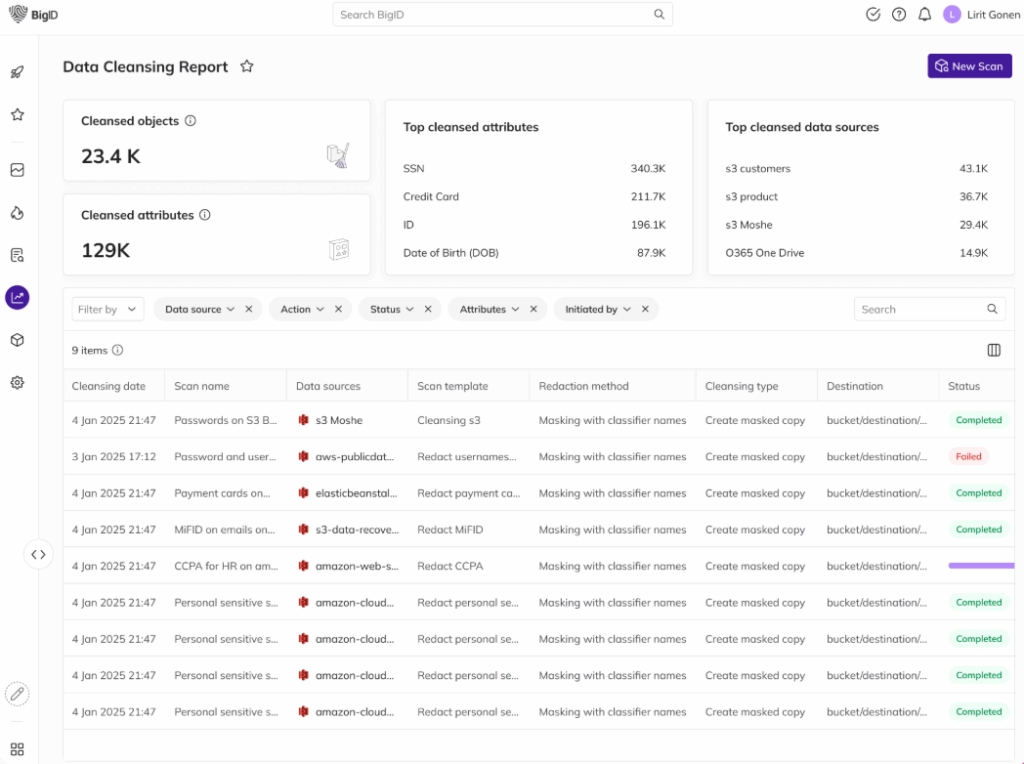

Data Cleansing for AI addresses this problem by giving teams a scalable way to preprocess datasets with built-in governance.

- Automatically detect and classify personal, sensitive, or regulated data

- Choose to redact or tokenize content, preserving utility without exposing individuals to risk

- Apply cleansing to both structured and unstructured data: emails, PDFs, documents, and more

- Enforce policy at the source, before data enters your LLM pipelines

The result? Cleaner datasets, lower risk, and a stronger AI security posture.
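To make the redact-versus-tokenize choice concrete, here is a minimal Python sketch. It is not BigID's implementation: the two regular expressions, the `redact` and `tokenize` helpers, the placeholder format, and the HMAC key are all assumptions made for illustration, and a real classifier would cover far more data types than these two patterns.

```python
import hashlib
import hmac
import re

# Hypothetical detectors: two simple regexes stand in for a real classifier.
PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def redact(text: str) -> str:
    """Replace every detected value with a fixed placeholder for its type."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label}_REDACTED]", text)
    return text


def tokenize(text: str, key: bytes) -> str:
    """Replace every detected value with a keyed, deterministic token.

    The same input value always maps to the same token under the same key,
    so relationships between records survive while the raw value does not.
    """
    def make_replacer(label: str):
        def replacer(match: re.Match) -> str:
            digest = hmac.new(key, match.group(0).encode("utf-8"), hashlib.sha256)
            return f"<{label}_{digest.hexdigest()[:10]}>"
        return replacer

    for label, pattern in PATTERNS.items():
        text = pattern.sub(make_replacer(label), text)
    return text


if __name__ == "__main__":
    sample = "Contact alice@example.com about SSN 123-45-6789; alice@example.com approved it"
    print(redact(sample))
    print(tokenize(sample, key=b"demo-key-not-for-production"))
```

Redaction discards the value entirely, while keyed tokenization maps the same input to the same token, so repeated references to one person or account stay linked in the cleansed text. That is the sense in which tokenization can preserve utility for downstream models.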

Real-world benefits

- Prevent data leakage: Keep personal and confidential information from being embedded in AI outputs.

- Guard against prompt injection: Reduce injection risk by cleansing prompts and source files.

- Keep the context, not the risk: Tokenization preserves structure, so models can continue to learn effectively.

- Govern unstructured data: Cleanse data beyond databases, where most sensitive content actually lives.

- Accelerate AI adoption with confidence: Give AI teams faster access to approved, trusted datasets.

Why it matters

AI is moving fast, but security, privacy, and compliance cannot fall behind.

Data Cleansing for AI is part of BigID's broader Secure Data Pipeline, helping organizations gain control over which data is discovered, labeled, and used in GenAI. It is how enterprises move from blind risk to proactive governance, without blocking innovation.

See it in action

Want to see how Data Cleansing for AI works? Schedule a 1:1 with one of our data security experts today!